Background

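The XX instance (one master, one slave) raises an SLA alert in xxx monitoring every day in the early morning. The alert means there is some replication lag between master and slave. If a master-slave switchover happened at that moment, it would take a long time to complete, because the slave must first catch up on the lag to keep master and slave data consistent.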

The XX instance also has the highest number of slow queries (any SQL statement that runs longer than 1s is logged), and the XX application runs a nightly job that deletes data older than one month.

Analysis

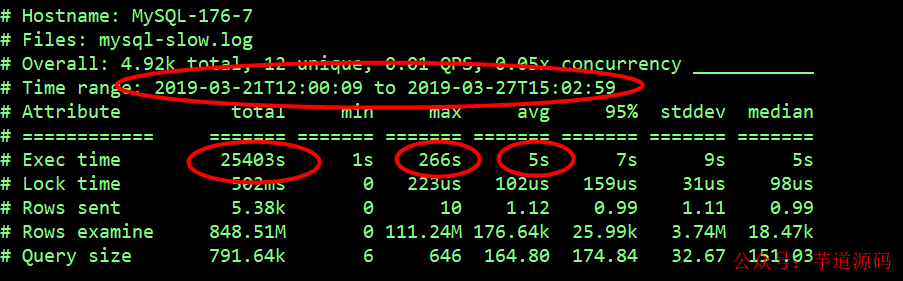

Use pt-query-digest to analyze the last week of mysql-slow.log:

    pt-query-digest --since=148h mysql-slow.log | less

Results, part 1

Over the past week, the logged slow queries took 25,403s in total. The slowest statement ran for 266s, the average slow statement ran for 5s, and the average number of rows scanned was 17.66 million.

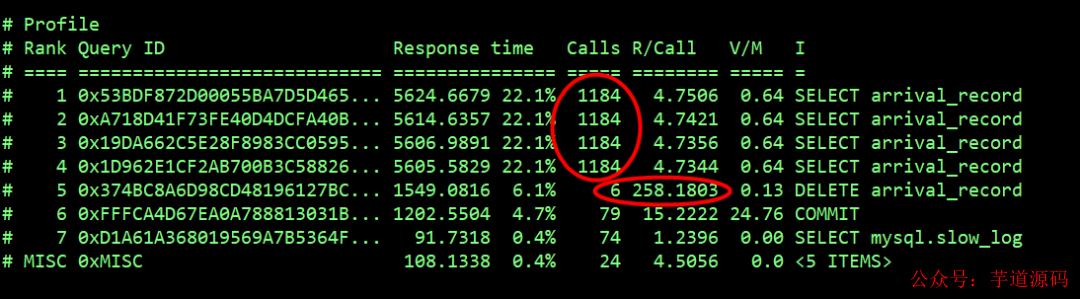

Results, part 2

select arrival_record accounts for the most logged slow queries, over 40,000 of them, with an average response time of 4s. delete arrival_record was logged 6 times, with an average response time of 258s.

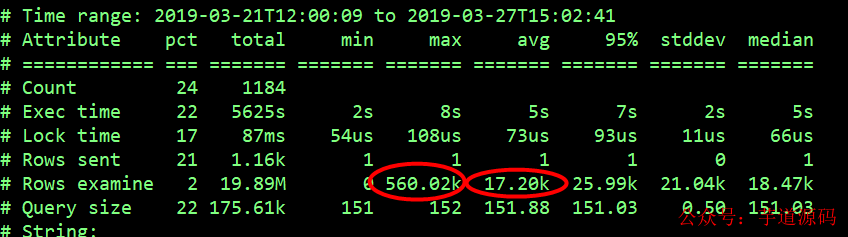

The select xxx_record statement

The slow select arrival_record statements all look like the one below: the columns in the where clause are the same, and only the parameter values differ.

    select count(*) from arrival_record where product_id=26 and receive_time between '2019-03-25 14:00:00' and '2019-03-25 15:00:00' and receive_spend_ms>=0\G

In MySQL, the select arrival_record statement scans up to 56 million rows, and 1.72 million rows on average. The long execution time is presumably caused by the large number of rows scanned.

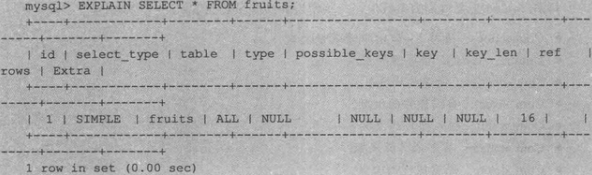

Check the execution plan

    explain select count(*) from arrival_record where product_id=26 and receive_time between '2019-03-25 14:00:00' and '2019-03-25 15:00:00' and receive_spend_ms>=0\G;
    *************************** 1. row ***************************
               id: 1
      select_type: SIMPLE
            table: arrival_record
       partitions: NULL
             type: ref
    possible_keys: IXFK_arrival_record
              key: IXFK_arrival_record
          key_len: 8
              ref: const
             rows: 32261320
         filtered: 3.70
            Extra: Using index condition; Using where
    1 row in set, 1 warning (0.00 sec)

The index IXFK_arrival_record is used, but the estimated number of rows to scan is very large: more than 30 million.

    show index from arrival_record;
    +----------------+------------+---------------------+--------------+--------------+-----------+-------------+----------+--------+------+------------+---------+---------------+
    | Table          | Non_unique | Key_name            | Seq_in_index | Column_name  | Collation | Cardinality | Sub_part | Packed | Null | Index_type | Comment | Index_comment |
    +----------------+------------+---------------------+--------------+--------------+-----------+-------------+----------+--------+------+------------+---------+---------------+
    | arrival_record |          0 | PRIMARY             |            1 | id           | A         |   107990720 |     NULL | NULL   |      | BTREE      |         |               |
    | arrival_record |          1 | IXFK_arrival_record |            1 | product_id   | A         |        1344 |     NULL | NULL   |      | BTREE      |         |               |
    | arrival_record |          1 | IXFK_arrival_record |            2 | station_no   | A         |       22161 |     NULL | NULL   | YES  | BTREE      |         |               |
    | arrival_record |          1 | IXFK_arrival_record |            3 | sequence     | A         |    77233384 |     NULL | NULL   |      | BTREE      |         |               |
    | arrival_record |          1 | IXFK_arrival_record |            4 | receive_time | A         |    65854652 |     NULL | NULL   | YES  | BTREE      |         |               |
    | arrival_record |          1 | IXFK_arrival_record |            5 | arrival_time | A         |    73861904 |     NULL | NULL   | YES  | BTREE      |         |               |
    +----------------+------------+---------------------+--------------+--------------+-----------+-------------+----------+--------+------+------------+---------+---------------+

    show create table arrival_record;
    ..........
    arrival_spend_ms bigint(20) DEFAULT NULL,
    total_spend_ms bigint(20) DEFAULT NULL,
    PRIMARY KEY (id),
    KEY IXFK_arrival_record (product_id,station_no,sequence,receive_time,arrival_time) USING BTREE,
    CONSTRAINT FK_arrival_record_product FOREIGN KEY (product_id) REFERENCES product (id) ON DELETE NO ACTION ON UPDATE NO ACTION
    ) ENGINE=InnoDB AUTO_INCREMENT=614538979 DEFAULT CHARSET=utf8 COLLATE=utf8_bin |

The table holds over 100 million rows and has only one composite index. The product_id column has very low cardinality, so its selectivity is poor.
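To put a number on that poor selectivity, here is a small sketch that uses only figures from the SHOW INDEX and EXPLAIN output above. The function name `rows_per_key` is made up for illustration. Dividing the estimated row count of the table by the product_id cardinality gives the average number of rows behind each product_id value.

```shell
# rows_per_key TOTAL_ROWS CARDINALITY
# Average number of rows that share one key value (integer division).
rows_per_key() {
  echo $(( $1 / $2 ))
}

# Figures from SHOW INDEX: PRIMARY cardinality and product_id cardinality.
rows_per_key 107990720 1344
```

This works out to roughly 80 thousand rows per product_id on average. The EXPLAIN estimate of 32261320 rows for product_id=26 is far above that average, which suggests the data is also heavily skewed toward a few products.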

The filter passed in, where product_id=26 and receive_time between '2019-03-25 14:00:00' and '2019-03-25 15:00:00' and receive_spend_ms>=0, does not include the station_no column, so the query cannot use the station_no, sequence and receive_time columns of the composite index IXFK_arrival_record.

By the leftmost-prefix rule, select arrival_record uses only the first column of the composite index IXFK_arrival_record, product_id. That column's selectivity is poor, so many rows are scanned and the statement takes a long time.
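The leftmost-prefix rule can be sketched as a tiny helper. This is a deliberately simplified model, not how the MySQL optimizer is implemented: it only considers equality predicates and ignores that one trailing range column can also be used. The function name `usable_prefix` and its argument format are assumptions made for this illustration.

```shell
# usable_prefix INDEX_COLUMNS EQUALITY_COLUMNS
# Both arguments are comma-separated lists. Prints the leading index columns
# usable for the lookup: walk the index left to right and stop at the first
# column that has no equality predicate.
usable_prefix() {
  awk -v idx="$1" -v preds="$2" 'BEGIN {
    n = split(preds, p, ",")
    for (i = 1; i <= n; i++) has[p[i]] = 1
    m = split(idx, col, ",")
    out = ""
    for (i = 1; i <= m; i++) {
      if (!(col[i] in has)) break
      out = (out == "" ? col[i] : out "," col[i])
    }
    print out
  }'
}

index_cols="product_id,station_no,sequence,receive_time,arrival_time"

# The slow count(*) query: equality on product_id only (receive_time is a range).
usable_prefix "$index_cols" "product_id"

# A hypothetical query with equality on product_id, station_no and sequence.
usable_prefix "$index_cols" "sequence,product_id,station_no"
```

The first call prints only product_id, which matches key_len 8 and ref const in the EXPLAIN output. The second prints the first three index columns, because the order of predicates in the where clause does not matter, only the order of columns in the index.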

The receive_time column has high cardinality and good selectivity, so a separate index can be created on it. The select arrival_record statement will then use that index.

We now know that the where clause of the select arrival_record statements in the slow query log filters on product_id, receive_time and receive_spend_ms. Is the table also accessed through filters on other columns?

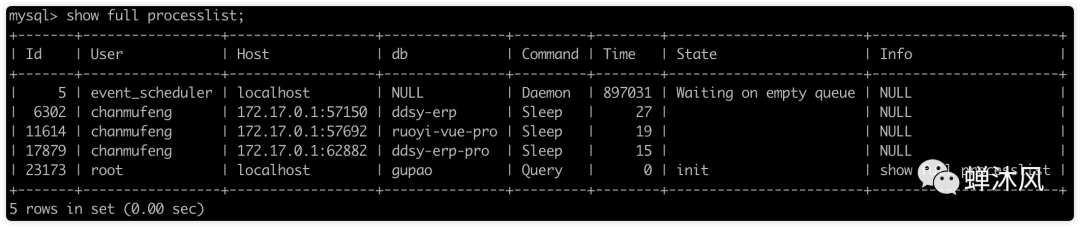

This is where tcpdump comes in.

Use tcpdump to capture the select statements against this table for a while:

    tcpdump -i bond0 -s 0 -l -w - dst port 3316 | strings | grep select | egrep -i 'arrival_record' > /tmp/select_arri.log

Extract the where clause that follows from in each select statement:

    IFS_OLD=$IFS
    IFS=$'\n'
    for i in `cat /tmp/select_arri.log`; do echo ${i#*'from'}; done | less
    IFS=$IFS_OLD

    arrival_record arrivalrec0_ where arrivalrec0_.sequence='2019-03-27 08:40' and arrivalrec0_.product_id=17 and arrivalrec0_.station_no='56742'
    arrival_record arrivalrec0_ where arrivalrec0_.sequence='2019-03-27 08:40' and arrivalrec0_.product_id=22 and arrivalrec0_.station_no='S7100'
    arrival_record arrivalrec0_ where arrivalrec0_.sequence='2019-03-27 08:40' and arrivalrec0_.product_id=24 and arrivalrec0_.station_no='V4631'
    arrival_record arrivalrec0_ where arrivalrec0_.sequence='2019-03-27 08:40' and arrivalrec0_.product_id=22 and arrivalrec0_.station_no='S9466'
    arrival_record arrivalrec0_ where arrivalrec0_.sequence='2019-03-27 08:40' and arrivalrec0_.product_id=24 and arrivalrec0_.station_no='V4205'
    arrival_record arrivalrec0_ where arrivalrec0_.sequence='2019-03-27 08:40' and arrivalrec0_.product_id=24 and arrivalrec0_.station_no='V4105'
    arrival_record arrivalrec0_ where arrivalrec0_.sequence='2019-03-27 08:40' and arrivalrec0_.product_id=24 and arrivalrec0_.station_no='V4506'
    arrival_record arrivalrec0_ where arrivalrec0_.sequence='2019-03-27 08:40' and arrivalrec0_.product_id=24 and arrivalrec0_.station_no='V4617'
    arrival_record arrivalrec0_ where arrivalrec0_.sequence='2019-03-27 08:40' and arrivalrec0_.product_id=22 and arrivalrec0_.station_no='S8356'
    arrival_record arrivalrec0_ where arrivalrec0_.sequence='2019-03-27 08:40' and arrivalrec0_.product_id=22 and arrivalrec0_.station_no='S8356'

The where clauses of these selects contain the product_id, station_no and sequence columns, so they can use the first three columns of the composite index IXFK_arrival_record.
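With a longer capture, reading the where clauses by eye gets tedious. The following sketch tallies which column combinations appear. It assumes one statement per line, a lowercase `where` keyword, and alias-qualified columns of the form `alias.column` as in the capture above. The function name `where_column_combos` is hypothetical, and string values that themselves contain `word.word` patterns would be miscounted.

```shell
# where_column_combos
# Reads captured statements on stdin, one per line, and prints each distinct
# set of alias-qualified WHERE columns together with how often it occurs.
where_column_combos() {
  sed -n 's/.*[[:space:]]where[[:space:]]//p' |
  while IFS= read -r clause; do
    printf '%s\n' "$clause" |
      grep -oE '[A-Za-z_][A-Za-z0-9_]*\.[A-Za-z_][A-Za-z0-9_]*' |
      sed 's/^[^.]*\.//' |
      sort -u |
      paste -sd, -
  done |
  sort | uniq -c | sort -rn
}

# Usage against the capture file from the tcpdump step:
# where_column_combos < /tmp/select_arri.log
```

Each output line is a count followed by a sorted column list, so a combination that does not start with the leading column of any index stands out immediately.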

In summary, the optimization is to drop the composite index IXFK_arrival_record, create the composite index idx_product_id_sequence_station_no, and create a separate index idx_receive_time.

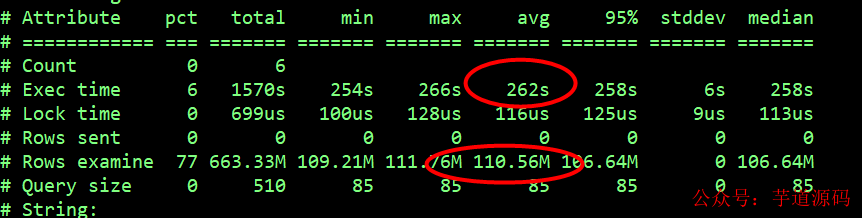

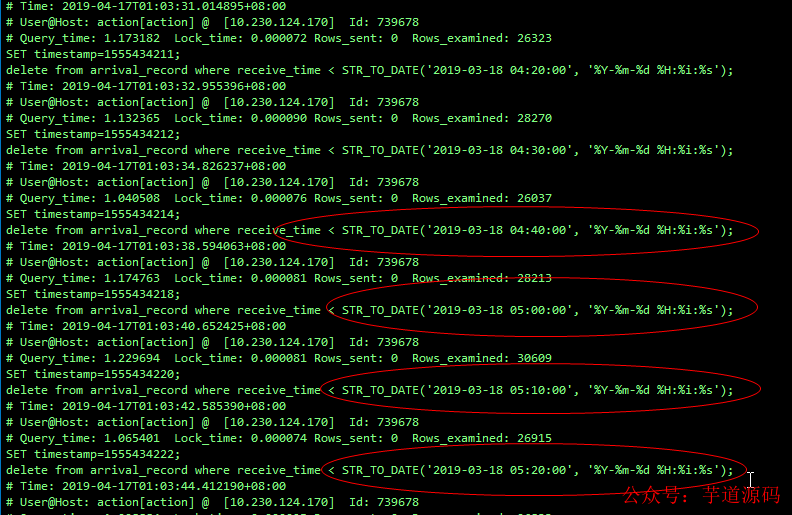

The delete xxx_record statement

This delete scans 110 million rows on average, with an average execution time of 262s.

The delete statement is shown below; each logged slow query passes a different parameter value.

    delete from arrival_record where receive_time

Execution plan

    explain select * from arrival_record where receive_time

This delete statement uses no index (no suitable index exists) and does a full table scan, which is why it takes so long.

The fix is the same: create a separate index idx_receive_time(receive_time).

Testing

Copy the arrival_record table to a test instance and run the drop-and-recreate index operation there.

arrival_record table information on the XX instance:

    du -sh /datas/mysql/data/3316/cq_new_cimiss/arrival_record*
    12K /datas/mysql/data/3316/cq_new_cimiss/arrival_record.frm
    48G /datas/mysql/data/3316/cq_new_cimiss/arrival_record.ibd

    select count(*) from cq_new_cimiss.arrival_record;
    +-----------+
    | count(*)  |
    +-----------+
    | 112294946 |
    +-----------+

Over 100 million rows.

    SELECT
      table_name,
      CONCAT(FORMAT(SUM(data_length)/1024/1024,2),'M') AS dbdata_size,
      CONCAT(FORMAT(SUM(index_length)/1024/1024,2),'M') AS dbindex_size,
      CONCAT(FORMAT(SUM(data_length+index_length)/1024/1024/1024,2),'G') AS `table_size(G)`,
      AVG_ROW_LENGTH, table_rows, update_time
    FROM information_schema.tables
    WHERE table_schema='cq_new_cimiss' and table_name='arrival_record';
    +----------------+-------------+--------------+---------------+----------------+------------+---------------------+
    | table_name     | dbdata_size | dbindex_size | table_size(G) | AVG_ROW_LENGTH | table_rows | update_time         |
    +----------------+-------------+--------------+---------------+----------------+------------+---------------------+
    | arrival_record | 18,268.02M  | 13,868.05M   | 31.38G        |            175 |  109155053 | 2019-03-26 12:40:17 |
    +----------------+-------------+--------------+---------------+----------------+------------+---------------------+

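The table takes 48G on disk but is only 31G according to MySQL, so there is about 17G of fragmentation. Most of it comes from deletes: the rows were removed, but the space was not reclaimed.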
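The 17G figure can be reproduced from the numbers above. This is only a rough sketch: `du` rounds to whole gigabytes and the information_schema sizes are estimates. The function name `fragmentation_gb` is made up for this example.

```shell
# fragmentation_gb DISK_GB DATA_MB INDEX_MB
# Prints the logical table size and the space not accounted for, both in GB.
fragmentation_gb() {
  awk -v disk="$1" -v data="$2" -v idx="$3" 'BEGIN {
    logical = (data + idx) / 1024
    printf "%.2f %.2f\n", logical, disk - logical
  }'
}

# 48G .ibd file, 18,268.02M of data and 13,868.05M of indexes.
fragmentation_gb 48 18268.02 13868.05
```

The first number is the 31.38G reported as table_size(G); the second, about 16.6G, is the fragmentation that the text rounds to 17G.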

Back up the table and restore it to a new instance, then drop the original composite index and add the new indexes for testing.

Parallel compressed backup with mydumper

    user=root
    passwd=xxxx
    socket=/datas/mysql/data/3316/mysqld.sock
    db=cq_new_cimiss
    table_name=arrival_record
    backupdir=/datas/dump_$table_name
    mkdir -p $backupdir
    nohup echo `date +%T` && mydumper -u $user -p $passwd -S $socket -B $db -c -T $table_name -o $backupdir -t 32 -r 2000000 && echo `date +%T` &

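The parallel compressed backup took 52s and used 1.2G of space. The table actually takes 48G on disk, so the compression ratio of mydumper's parallel compressed backup is very high.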

    Started dump at: 2019-03-26 12:46:04
    ........
    Finished dump at: 2019-03-26 12:46:56

    du -sh /datas/dump_arrival_record/
    1.2G /datas/dump_arrival_record/

Copy the dump to the test node

    scp -rp /datas/dump_arrival_record root@10.230.124.19:/datas

Multi-threaded import

    time myloader -u root -S /datas/mysql/data/3308/mysqld.sock -P 3308 -p root -B test -d /datas/dump_arrival_record -t 32

    real 126m42.885s
    user 1m4.543s
    sys 0m4.267s

Disk space used by the table after the logical import

    du -h -d 1 /datas/mysql/data/3308/test/arrival_record.*
    12K /datas/mysql/data/3308/test/arrival_record.frm
    30G /datas/mysql/data/3308/test/arrival_record.ibd

There is no fragmentation, and the size matches the table size reported by MySQL.

    cp -rp /datas/mysql/data/3308 /datas

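Run the drop-and-recreate index operation once with online DDL and once with pt-osc. The foreign key has to be dropped first: the foreign key column is the first column of the composite index, so the composite index cannot be dropped while the foreign key exists.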

    nohup bash /tmp/ddl_index.sh &
    2019-04-04-10:41:39 begin stop mysqld_3308
    2019-04-04-10:41:41 begin rm -rf datadir and cp -rp datadir_bak
    2019-04-04-10:46:53 start mysqld_3308
    2019-04-04-10:46:59 online ddl begin
    2019-04-04-11:20:34 onlie ddl stop
    2019-04-04-11:20:34 begin stop mysqld_3308
    2019-04-04-11:20:36 begin rm -rf datadir and cp -rp datadir_bak
    2019-04-04-11:22:48 start mysqld_3308
    2019-04-04-11:22:53 pt-osc begin
    2019-04-04-12:19:15 pt-osc stop

Online DDL took 34 minutes and pt-osc took 57 minutes, so online DDL needed a little over half (about 60%) of the time pt-osc did.
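The two durations can be checked directly from the begin and stop timestamps in the log. A minimal sketch, assuming both timestamps fall on the same day; the helper names `to_seconds` and `elapsed` are made up for this example.

```shell
# to_seconds HH:MM:SS  ->  seconds since midnight
to_seconds() {
  echo "$1" | awk -F: '{ print $1 * 3600 + $2 * 60 + $3 }'
}

# elapsed START END  ->  duration as "<minutes>m<seconds>s" (same-day timestamps only)
elapsed() {
  start=$(to_seconds "$1")
  end=$(to_seconds "$2")
  diff=$(( end - start ))
  printf '%dm%02ds\n' $(( diff / 60 )) $(( diff % 60 ))
}

elapsed 10:46:59 11:20:34   # online DDL
elapsed 11:22:53 12:19:15   # pt-osc
```

The results are 33m35s for online DDL and 56m22s for pt-osc, a ratio of roughly 0.6.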

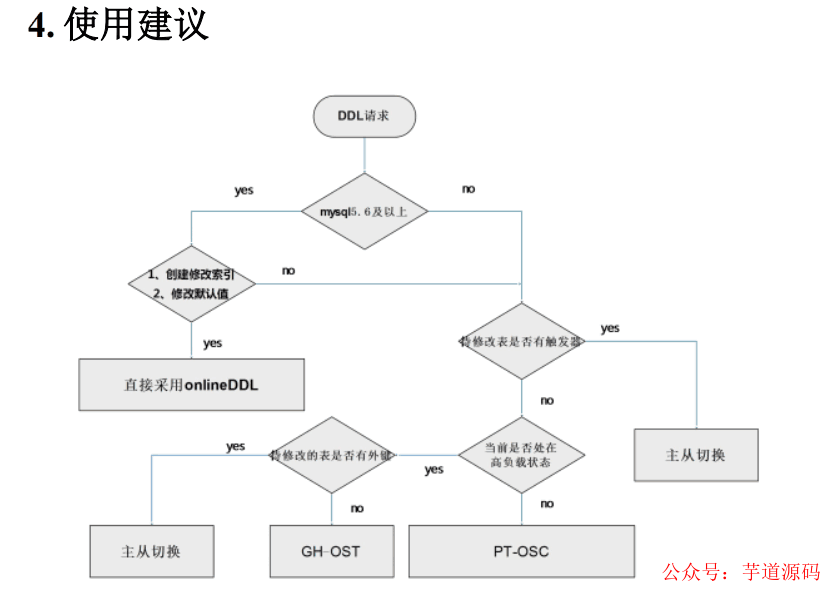

*Reference for running DDL*

Implementation

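Because this is a one-master, one-slave instance and the application connects through a VIP, the index drop and rebuild is done with online DDL. After stopping replication, run the DDL on the slave first (without writing to the binlog), switch master and slave, and then run it on the newly demoted slave (again without writing to the binlog).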

    # Print a message prefixed with the current date and time.
    function red_echo () {
        local what="$*"
        echo -e "$(date +%F-%T) ${what}"
    }

    # Abort with message $2 if the previous exit status $1 is not 0.
    function check_las_comm () {
        if [ "$1" != "0" ]; then
            red_echo "$2"
            echo "exit 1"
            exit 1
        fi
    }

    red_echo "stop slave"
    mysql -uroot -p$passwd --socket=/datas/mysql/data/${port}/mysqld.sock -e "stop slave"
    check_las_comm "$?" "stop slave failed"

    red_echo "online ddl begin"
    # Backticks are escaped so the shell does not treat them as command substitution.
    mysql -uroot -p$passwd --socket=/datas/mysql/data/${port}/mysqld.sock -e "set sql_log_bin=0;select now() as ddl_start;ALTER TABLE $db_.\`${table_name}\` DROP FOREIGN KEY FK_arrival_record_product,drop index IXFK_arrival_record,add index idx_product_id_sequence_station_no(product_id,sequence,station_no),add index idx_receive_time(receive_time);select now() as ddl_stop" >>${log_file} 2>&1
    red_echo "onlie ddl stop"

    red_echo "add foreign key"
    mysql -uroot -p$passwd --socket=/datas/mysql/data/${port}/mysqld.sock -e "set sql_log_bin=0;ALTER TABLE $db_.${table_name} ADD CONSTRAINT _FK_${table_name}_product FOREIGN KEY (product_id) REFERENCES cq_new_cimiss.product (id) ON DELETE NO ACTION ON UPDATE NO ACTION;" >>${log_file} 2>&1
    check_las_comm "$?" "add foreign key error"
    red_echo "add foreign key stop"

    red_echo "start slave"
    mysql -uroot -p$passwd --socket=/datas/mysql/data/${port}/mysqld.sock -e "start slave"
    check_las_comm "$?" "start slave failed"

*Execution time*

    2019-04-08-1136 stop slave
    mysql: [Warning] Using a password on the command line interface can be insecure.
    ddl_start
    2019-04-08 1136
    ddl_stop
    2019-04-08 1113
    2019-04-08-1113 onlie ddl stop
    2019-04-08-1113 add foreign key
    mysql: [Warning] Using a password on the command line interface can be insecure.
    2019-04-08-1248 add foreign key stop
    2019-04-08-1248 start slave

*Check the execution plans of the delete and select statements again*

    explain select count(*) from arrival_record where receive_time=0\G;
    *************************** 1. row ***************************
               id: 1
      select_type: SIMPLE
            table: arrival_record
       partitions: NULL
             type: range
    possible_keys: idx_product_id_sequence_station_no,idx_receive_time
              key: idx_receive_time
          key_len: 6
              ref: NULL
             rows: 291448
         filtered: 16.66
            Extra: Using index condition; Using where

Both statements now use the idx_receive_time index, and the number of rows scanned drops sharply.

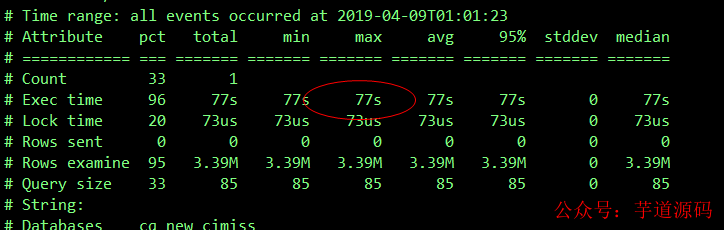

After the index optimization

The delete still took 77s:

    delete from arrival_record where receive_time

Even with the receive_time index, the delete statement took 77s to remove over 3 million rows.

Turning large-table deletes into small batches

*The application now deletes 10 minutes of data per statement (each run takes about 1s), and the SLA alert (master-slave lag) no longer appears in xxx.*

*Another approach is to delete 20,000 rows at a time in primary key order*

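    # Get the largest primary key id that satisfies the time condition,
    # then delete in small batches by scanning in primary key order.
    # This assumes id grows with receive_time, so every row up to that id is old enough.
    # Run the following statements once first.
    SELECT MAX(id) INTO @need_delete_max_id FROM `arrival_record` WHERE receive_time<'2019-03-01';
    # <= so that the row holding the maximum id is deleted as well
    DELETE FROM arrival_record WHERE id<=@need_delete_max_id LIMIT 20000;
    select ROW_COUNT();  # returns 20000

    # After each small-batch delete, row_count() returns the number of rows deleted.
    # The program checks row_count(): if it is not 0, run the loop body below again;
    # if it is 0, exit the loop because the delete is complete.
    DELETE FROM arrival_record WHERE id<=@need_delete_max_id LIMIT 20000;
    select ROW_COUNT();
    # The program sleeps 0.5s between batches.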
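The loop described in those comments could be driven from a shell script along the following lines. This is a sketch under several assumptions, not the application's actual code. `run_sql` and `batch_delete` are hypothetical helpers, and `$passwd`, `$socket` and `$db` are assumed to be set as in the backup script. Because user variables and ROW_COUNT() are scoped to a session and every `mysql -e` call opens a new one, the maximum id is passed as a literal, and the DELETE and `SELECT ROW_COUNT()` are sent in the same call. The comparison uses `<=` so the boundary row is removed too.

```shell
# Hypothetical helper: runs the given SQL in a single mysql client session and
# prints the result without column headers (-N) in batch mode (-B).
run_sql() {
  mysql -uroot -p"$passwd" --socket="$socket" -N -B -e "$1" "$db"
}

# batch_delete CUTOFF BATCH_SIZE PAUSE_SECONDS
# Deletes rows older than CUTOFF in primary-key order, BATCH_SIZE rows at a
# time, pausing between batches. Prints the total number of rows deleted.
batch_delete() {
  max_id=$(run_sql "SELECT IFNULL(MAX(id), 0) FROM arrival_record WHERE receive_time < '$1'")
  total=0
  while :; do
    # DELETE and ROW_COUNT() must share one session, so they go in one call.
    deleted=$(run_sql "DELETE FROM arrival_record WHERE id <= $max_id LIMIT $2; SELECT ROW_COUNT();")
    [ "$deleted" -gt 0 ] || break
    total=$(( total + deleted ))
    sleep "$3"
  done
  echo "$total"
}

# Example call (not run here): batch_delete 2019-03-01 20000 0.5
```

A fractional pause such as 0.5 relies on a `sleep` that accepts fractions, as GNU coreutils does. Keeping each batch small keeps each transaction short, which is what limits replication lag on the slave.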

Summary

When a table grows very large, watch not only the response time of queries against it but also its maintenance cost, such as DDL changes that take too long and the deletion of historical data.

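When running DDL on a large table, take the table's actual circumstances into account (for example, how much concurrent access it gets and whether it has foreign keys) and choose the DDL method accordingly.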

When deleting from a table that holds a large amount of data, delete in small batches to reduce the load on the master and the replication lag.

Reviewed and edited by: Liu Qing

Original title: Interviewer: How do you deeply optimize a MySQL table with over 100 million rows?

Source: WeChat official account 芋道源码 (WeChat ID: 芋道源码). Please credit the source when reposting.

發(fā)布評(píng)論請(qǐng)先 登錄

相關(guān)推薦

mysql中文參考手冊(cè)chm

MySQL表分區(qū)類型及介紹

Mysql優(yōu)化選擇最佳索引規(guī)則

MySQL優(yōu)化之查詢性能優(yōu)化之查詢優(yōu)化器的局限性與提示

MySQL數(shù)據(jù)庫(kù):理解MySQL的性能優(yōu)化、優(yōu)化查詢

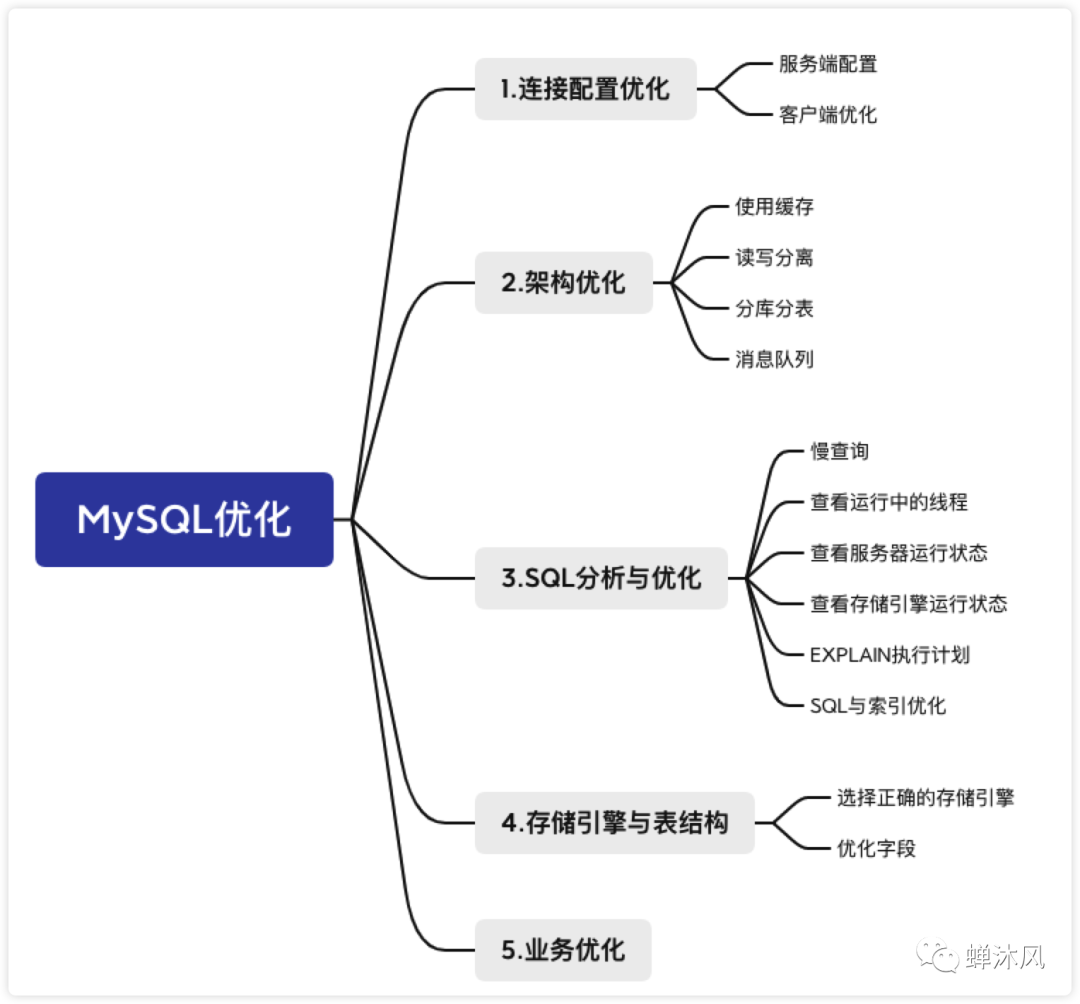

你會(huì)從哪些維度進(jìn)行MySQL性能優(yōu)化?1

你會(huì)從哪些維度進(jìn)行MySQL性能優(yōu)化?2

MySQL上億大表如何深度優(yōu)化呢

MySQL上億大表如何深度優(yōu)化呢

評(píng)論