Author: Liu Enhao, JD Technology

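I. Background

In our private-delivery solution built on K8s clusters, log collection uses an ilogtail + logstash + kafka + es stack: ilogtail collects the logs, logstash transforms the data, kafka absorbs traffic peaks in the log pipeline and so reduces write pressure on es, and es stores the log data. In a private delivery, the middleware in this stack normally has to be deployed standalone. For deployments inside JD's intranet, however, standalone deployment is not recommended, given the high-availability requirements for kafka and es.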

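II. The New Approach in Practice

1. Overview of the new approach

To deploy K8s inside JD's intranet and collect logs there, we considered JMQ + JES as a replacement for kafka + es. Because JMQ is built on Kafka and JES is built on ES, the replacement is feasible in theory.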

2. Architecture

The data flows roughly as follows:

Application logs -> ilogtail -> JMQ -> logstash -> JES

3. How to use it

Summary of the core changes

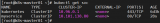

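ilogtail nameservers configuration

Add a nameserver that can resolve the JMQ domain (JD Cloud hosts cannot resolve .local domains directly).

    spec:
      template:
        spec:
          dnsPolicy: "None"
          dnsConfig:
            nameservers:
              - x.x.x.x # nameserver that can resolve the JMQ domain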
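
One way to confirm that the nameserver change took effect is to resolve the JMQ hostname from inside the ilogtail pod. The following is a minimal sketch, not part of the original setup: `resolve_host` is a hypothetical helper name, and the `resolver` parameter exists only so the lookup can be replaced in tests.

```python
import socket


def resolve_host(host, port, resolver=socket.getaddrinfo):
    """Return the sorted IP addresses for host, or an empty list if resolution fails."""
    try:
        infos = resolver(host, port, proto=socket.IPPROTO_TCP)
    except socket.gaierror:
        return []
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})
```

Calling `resolve_host("nameserver.jmq.jd.local", 80)` inside the pod should return at least one address once the nameserver is configured; an empty list suggests that `dnsConfig.nameservers` is not being applied.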

ilogtail flushers configuration

Adjust the flusher configuration so that logs are sent to JMQ.

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: ilogtail-user-cm
      namespace: elastic-system
    data:
      app_stdout.yaml: |
        flushers:
          - Type: flusher_stdout
            OnlyStdout: true
          - Type: flusher_kafka_v2
            Brokers:
              - nameserver.jmq.jd.local:80 # JMQ metadata address
            Topic: ai-middle-k8s-log-prod # JMQ topic
            ClientID: ai4middle4log # Kafka client ID (identifies the client and must be unique); maps to the JMQ Group name. Important: see https://ilogtail.gitbook.io/ilogtail-docs/plugins/input/service-kafka#cai-ji-pei-zhi-v2

logstash kafka & es configuration

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: logstash-config
      namespace: elastic-system
      labels:
        elastic-app: logstash
    data:
      logstash.conf: |-
        input {
          kafka {
            bootstrap_servers => ["nameserver.jmq.jd.local:80"] # JMQ metadata address
            group_id => "ai4middle4log" # JMQ Group name
            client_id => "ai4middle4log" # JMQ Group name; JMQ drops Kafka's client_id concept and uses the Group name instead
            consumer_threads => 2
            decorate_events => true
            topics => ["ai-middle-k8s-log-prod"] # JMQ topic
            auto_offset_reset => "latest"
            codec => json { charset => "UTF-8" }
          }
        }
        output {
          elasticsearch {
            hosts => ["http://x.x.x.x:40000","http://x.x.x.x:40000","http://x.x.x.x:40000"] # ES address
            index => "%{[@metadata][kafka][topic]}-%{+YYYY-MM-dd}" # index naming rule
            user => "XXXXXX" # JES username
            password => "xxxxx" # JES password
            ssl => "false"
            ssl_certificate_verification => "false"
          }
        }
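
The two snippets above share three values: the JMQ metadata address, the topic, and the Group name. A small consistency check can catch a mismatch before deployment. This is a sketch under assumptions: `check_jmq_settings` is a hypothetical helper, the dictionaries are hand-copied from the configs rather than parsed, and the rule that `ClientID`, `group_id` and `client_id` must all be equal reflects this article's setup, not a documented JMQ requirement.

```python
def check_jmq_settings(flusher, kafka_input):
    """Return a list of mismatches between the ilogtail flusher and the logstash input."""
    problems = []
    if sorted(flusher["Brokers"]) != sorted(kafka_input["bootstrap_servers"]):
        problems.append("broker addresses differ")
    if flusher["Topic"] not in kafka_input["topics"]:
        problems.append("logstash does not consume the topic ilogtail writes to")
    # In this article's setup all three names carry the JMQ Group name.
    names = {flusher["ClientID"], kafka_input["group_id"], kafka_input["client_id"]}
    if len(names) != 1:
        problems.append("ClientID, group_id and client_id are not the same name")
    return problems


# Values copied by hand from the two ConfigMaps above.
ilogtail_flusher = {
    "Brokers": ["nameserver.jmq.jd.local:80"],
    "Topic": "ai-middle-k8s-log-prod",
    "ClientID": "ai4middle4log",
}
logstash_input = {
    "bootstrap_servers": ["nameserver.jmq.jd.local:80"],
    "topics": ["ai-middle-k8s-log-prod"],
    "group_id": "ai4middle4log",
    "client_id": "ai4middle4log",
}

print(check_jmq_settings(ilogtail_flusher, logstash_input))  # []
```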

The full ilogtail configuration is as follows:

    # ilogtail-daemonset.yaml
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: ilogtail-ds
      namespace: elastic-system
      labels:
        k8s-app: logtail-ds
    spec:
      selector:
        matchLabels:
          k8s-app: logtail-ds
      template:
        metadata:
          labels:
            k8s-app: logtail-ds
        spec:
          dnsPolicy: "None"
          dnsConfig:
            nameservers:
              - x.x.x.x # nameserver that can resolve the JMQ domain (on JD Cloud hosts)
          tolerations:
            - operator: Exists # deploy on all nodes
          containers:
            - name: logtail
              env:
                - name: ALIYUN_LOG_ENV_TAGS # add log tags from env
                  value: _node_name_|_node_ip_
                - name: _node_name_
                  valueFrom:
                    fieldRef:
                      apiVersion: v1
                      fieldPath: spec.nodeName
                - name: _node_ip_
                  valueFrom:
                    fieldRef:
                      apiVersion: v1
                      fieldPath: status.hostIP
                - name: cpu_usage_limit # iLogtail's self monitor cpu limit
                  value: "1"
                - name: mem_usage_limit # iLogtail's self monitor mem limit
                  value: "512"
              image: dockerhub.ai.jd.local/ai-middleware/ilogtail-community-edition/ilogtail:1.3.1
              imagePullPolicy: IfNotPresent
              resources:
                limits:
                  cpu: 1000m
                  memory: 1Gi
                requests:
                  cpu: 400m
                  memory: 384Mi
              volumeMounts:
                - mountPath: /var/run # for container runtime socket
                  name: run
                - mountPath: /logtail_host # for log access on the node
                  mountPropagation: HostToContainer
                  name: root
                  readOnly: true
                - mountPath: /usr/local/ilogtail/checkpoint # for checkpoint between container restart
                  name: checkpoint
                - mountPath: /usr/local/ilogtail/user_yaml_config.d # mount config dir
                  name: user-config
                  readOnly: true
                - mountPath: /usr/local/ilogtail/apsara_log_conf.json
                  name: apsara-log-config
                  readOnly: true
                  subPath: apsara_log_conf.json
          hostNetwork: true
          volumes:
            - hostPath:
                path: /var/run
                type: Directory
              name: run
            - hostPath:
                path: /
                type: Directory
              name: root
            - hostPath:
                path: /etc/ilogtail-ilogtail-ds/checkpoint
                type: DirectoryOrCreate
              name: checkpoint
            - configMap:
                defaultMode: 420
                name: ilogtail-user-cm
              name: user-config
            - configMap:
                defaultMode: 420
                name: ilogtail-apsara-log-config-cm
              name: apsara-log-config

    # ilogtail-user-configmap.yaml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: ilogtail-user-cm
      namespace: elastic-system
    data:
      app_stdout.yaml: |
        enable: true
        inputs:
          - Type: service_docker_stdout
            Stderr: true
            Stdout: true
            K8sNamespaceRegex: ai-train
            ExternalK8sLabelTag:
              platform/resource-name: k8s_label_resource-name
              platform/task-identify: k8s_label_task-identify
              task-id: k8s_label_task-id
              run-id: k8s_label_run-id
              request-id: k8s_label_request-id
        processors:
          - Type: processor_rename
            SourceKeys:
              - k8s_label_resource-name
              - k8s_label_task-identify
              - k8s_label_task-id
              - k8s_label_run-id
              - k8s_label_request-id
              - _namespace_
              - _image_name_
              - _pod_uid_
              - _pod_name_
              - _container_name_
              - _container_ip_
              - __path__
              - _source_
            DestKeys:
              - resource_name
              - task_identify
              - task_id
              - run_id
              - request_id
              - namespace
              - image_name
              - pod_uid
              - pod_name
              - container_name
              - container_ip
              - path
              - source
        flushers:
          - Type: flusher_stdout
            OnlyStdout: true
          - Type: flusher_kafka_v2
            Brokers:
              - nameserver.jmq.jd.local:80 # JMQ metadata address
            Topic: ai-middle-k8s-log-prod # JMQ topic
            ClientID: ai4middle4log # Kafka client ID (identifies the client and must be unique); maps to the JMQ Group name. Important: see https://ilogtail.gitbook.io/ilogtail-docs/plugins/input/service-kafka#cai-ji-pei-zhi-v2
      app_file_log.yaml: |
        enable: true
        inputs:
          - Type: file_log
            LogPath: /export/Logs/ai-dt-algorithm-tools
            FilePattern: "*.log"
            ContainerInfo:
              K8sNamespaceRegex: ai-train
              ExternalK8sLabelTag:
                platform/resource-name: k8s_label_resource-name
                platform/task-identify: k8s_label_task-identify
                task-id: k8s_label_task-id
                run-id: k8s_label_run-id
                request-id: k8s_label_request-id
        processors:
          - Type: processor_add_fields
            Fields:
              source: file
          - Type: processor_rename
            SourceKeys:
              - __tag__:k8s_label_resource-name
              - __tag__:k8s_label_task-identify
              - __tag__:k8s_label_task-id
              - __tag__:k8s_label_run-id
              - __tag__:k8s_label_request-id
              - __tag__:_namespace_
              - __tag__:_image_name_
              - __tag__:_pod_uid_
              - __tag__:_pod_name_
              - __tag__:_container_name_
              - __tag__:_container_ip_
              - __tag__:__path__
            DestKeys:
              - resource_name
              - task_identify
              - task_id
              - run_id
              - request_id
              - namespace
              - image_name
              - pod_uid
              - pod_name
              - container_name
              - container_ip
              - path
        flushers:
          - Type: flusher_stdout
            OnlyStdout: true
          - Type: flusher_kafka_v2
            Brokers:
              - nameserver.jmq.jd.local:80
            Topic: ai-middle-k8s-log-prod
            ClientID: ai4middle4log
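
In the `processor_rename` blocks, each entry in `SourceKeys` is paired by position with the entry at the same index in `DestKeys`, so the two lists must have the same length and order. The sketch below imitates that pairing on a plain dictionary to show the effect on one record. It is an illustration only, not iLogtail's implementation; `rename_fields` and the sample record are hypothetical.

```python
def rename_fields(record, source_keys, dest_keys):
    """Rename record keys by pairing source_keys[i] with dest_keys[i]."""
    if len(source_keys) != len(dest_keys):
        raise ValueError("SourceKeys and DestKeys must have the same length")
    renamed = dict(record)
    for src, dst in zip(source_keys, dest_keys):
        # Keys missing from the record are skipped in this sketch.
        if src in renamed:
            renamed[dst] = renamed.pop(src)
    return renamed


# A made-up stdout record carrying some of the fields listed in the ConfigMap.
record = {
    "content": "training step 42 finished",
    "_namespace_": "ai-train",
    "_pod_name_": "trainer-0",
    "k8s_label_task-id": "t-1001",
}
source_keys = ["k8s_label_task-id", "_namespace_", "_pod_name_", "_container_ip_"]
dest_keys = ["task_id", "namespace", "pod_name", "container_ip"]

print(rename_fields(record, source_keys, dest_keys))
```

After the rename, the record keeps `content` unchanged and exposes `task_id`, `namespace` and `pod_name`, which are the field names that end up in JES.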

The full logstash configuration is as follows:

    # logstash-configmap.yaml
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: logstash-config
      namespace: elastic-system
      labels:
        elastic-app: logstash
    data:
      logstash.conf: |-
        input {
          kafka {
            bootstrap_servers => ["nameserver.jmq.jd.local:80"] # JMQ metadata address
            #group_id => "services"
            group_id => "ai4middle4log" # JMQ Group name
            client_id => "ai4middle4log" # JMQ Group name; JMQ drops Kafka's client_id concept and uses the Group name instead
            consumer_threads => 2
            decorate_events => true
            #topics_pattern => ".*"
            topics => ["ai-middle-k8s-log-prod"] # JMQ topic
            auto_offset_reset => "latest"
            codec => json { charset => "UTF-8" }
          }
        }
        filter {
          ruby {
            code => "event.set('index_date', event.get('@timestamp').time.localtime + 8*60*60)"
          }
          ruby {
            code => "event.set('message',event.get('contents'))"
          }
          #ruby {
          #  code => "event.set('@timestamp',event.get('time').time.localtime)"
          #}
          mutate {
            remove_field => ["contents"]
            convert => ["index_date", "string"]
            #convert => ["@timestamp", "string"]
            gsub => ["index_date", "T.*Z",""]
            #gsub => ["@timestamp", "T.*Z",""]
          }
        }
        output {
          elasticsearch {
            #hosts => ["https://ai-middle-cluster-es-http:9200"]
            hosts => ["http://x.x.x.x:40000","http://x.x.x.x:40000","http://x.x.x.x:40000"] # ES address
            index => "%{[@metadata][kafka][topic]}-%{+YYYY-MM-dd}" # index naming rule
            user => "XXXXXX" # JES username
            password => "xxxxx" # JES password
            ssl => "false"
            ssl_certificate_verification => "false"
            #cacert => "/usr/share/logstash/cert/ca_logstash.cer"
          }
          stdout {
            codec => rubydebug
          }
        }
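
The filter and index rule above can be easier to follow with a worked example. As read here, the first `ruby` block shifts `@timestamp` by 8 hours, and `mutate` then stringifies the result and strips everything from `T` to `Z`, leaving a UTC+8 calendar date in `index_date`. The index name, by contrast, is formatted from `@timestamp` itself, which Logstash renders in UTC. The sketch below reproduces both computations; the function names are hypothetical and the code imitates the behaviour rather than calling Logstash.

```python
import re
from datetime import datetime, timedelta, timezone


def index_date(timestamp: datetime) -> str:
    """Imitate the filter: add 8 hours, render as an ISO string, strip the time part."""
    shifted = timestamp.astimezone(timezone.utc) + timedelta(hours=8)
    millis = shifted.microsecond // 1000
    iso = shifted.strftime("%Y-%m-%dT%H:%M:%S.") + f"{millis:03d}Z"
    return re.sub(r"T.*Z", "", iso)


def index_name(topic: str, timestamp: datetime) -> str:
    """Imitate index => "%{[@metadata][kafka][topic]}-%{+YYYY-MM-dd}" (date taken in UTC)."""
    return f"{topic}-{timestamp.astimezone(timezone.utc):%Y-%m-%d}"


ts = datetime(2023, 5, 1, 18, 30, 0, tzinfo=timezone.utc)
print(index_date(ts))                            # 2023-05-02
print(index_name("ai-middle-k8s-log-prod", ts))  # ai-middle-k8s-log-prod-2023-05-01
```

For an event at 18:30 UTC the two dates differ by one day, so `index_date` and the daily index boundary do not always line up; this matters when querying JES by local date.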

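4. Core value

With a few simple changes on top of the private deployment, the system integrates seamlessly with JD's internal middleware, which makes it more adaptable in terms of high availability and usable in a wider range of environments.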

Reviewed and edited by Huang Yu
