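With the rapid development of AI and large models, traditional centralized computing can no longer keep up with the surge in data-processing demand. Distributed computing splits one computing task into multiple subtasks, runs them in parallel on multiple compute nodes, and aggregates the partial results into a final result. This approach handles large-scale data and complex computing tasks more efficiently, reliably, and flexibly, and it is now widely used across industries.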

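So how do you build a distributed computing environment from scratch? This article walks through the whole process: hardware selection, basic server-side configuration, GPU driver installation, collective communication library setup, enabling lossless Ethernet, and finally importing a large model and running training tests.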

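Hardware preparation

GPU server selection

A GPU has a large number of compute cores and can process many data tasks simultaneously, which makes it the key hardware of an intelligent computing center.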

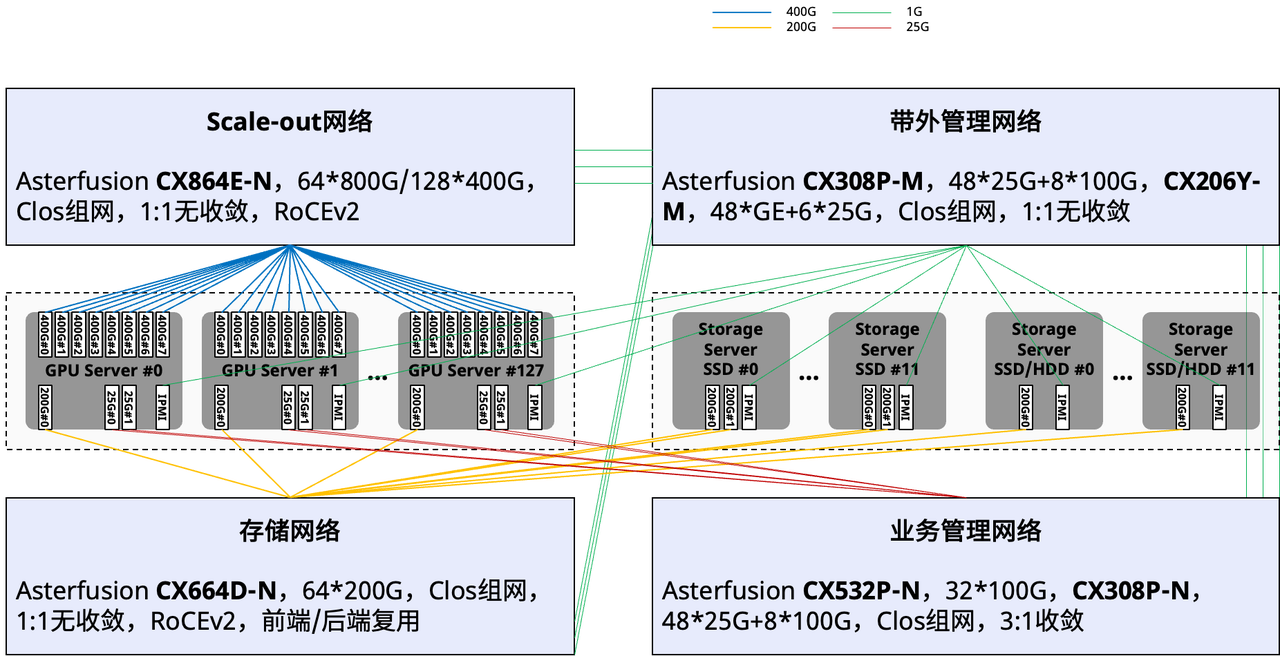

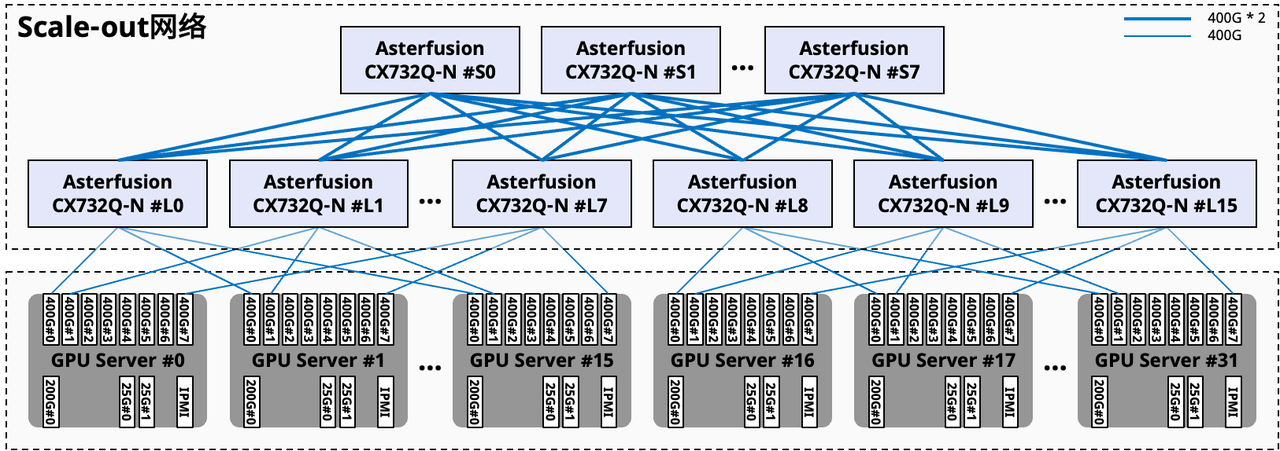

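In the overall design of an intelligent computing center solution, the GPU server cluster and the storage server cluster are connected through the compute network (scale-out network) and the storage network, respectively. There are also two management networks: the service management network interconnects the GPU servers and carries AIOS management-plane traffic, while the out-of-band management network connects every device in the center for operations and maintenance access.

Figure 1: High-level design topology of the intelligent computing center solution

With the overall design established, we compare the internal hardware connection topology of a general-purpose compute server with that of a GPU server to understand the logic behind GPU server selection: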

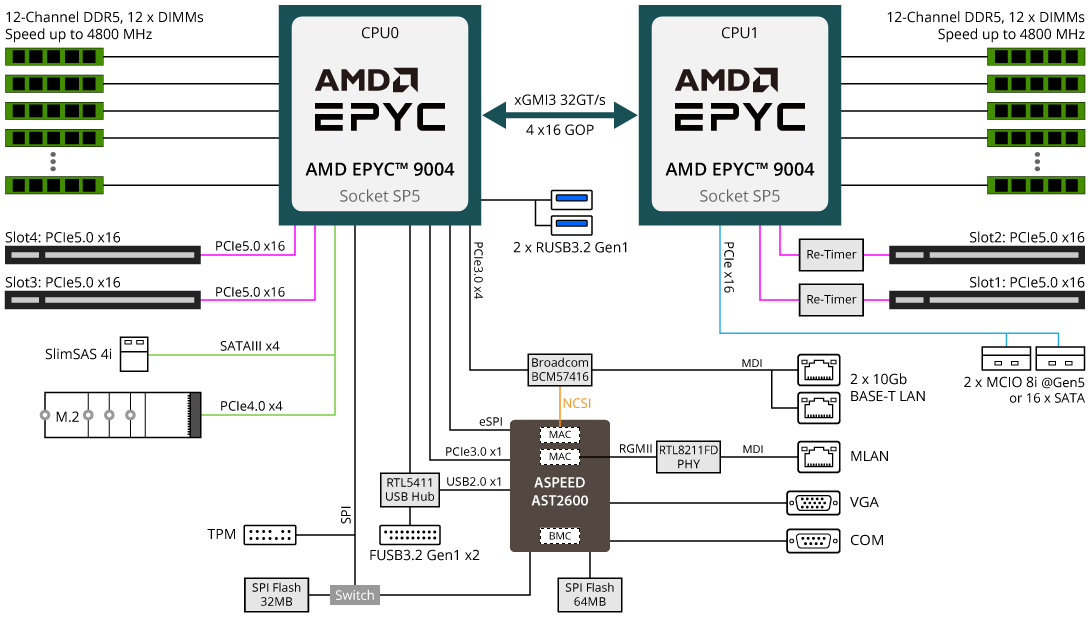

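Figure 2: Internal hardware connection topology of a general-purpose compute server

Figure 3: Internal hardware connection topology of a GPU server

Figure 2 shows the internal hardware connection topology of a general-purpose compute server. The core of this server is two AMD EPYC CPUs, whose I/O chiplets fan out a number of interfaces that help the CPUs deliver their full general-purpose computing capability.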

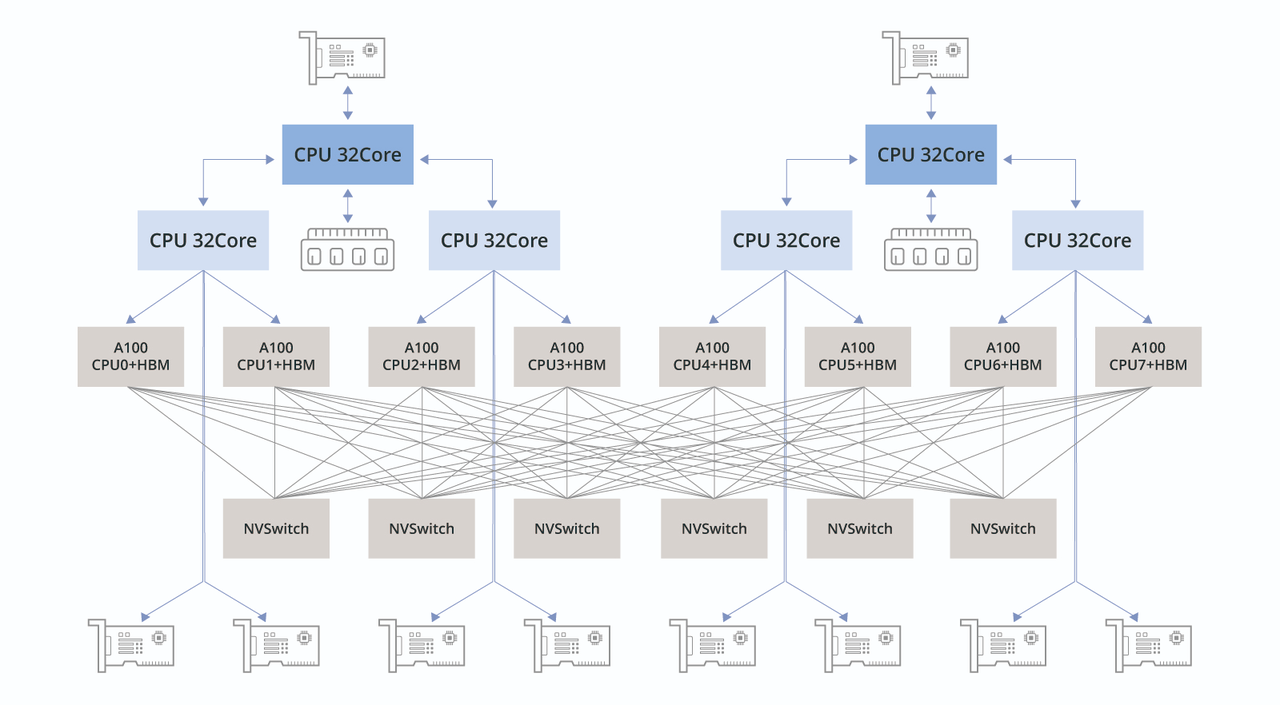

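Figure 3 shows the internal hardware connection topology of a GPU server. This server carries eight A100 GPUs, eight RDMA NICs for compute traffic, and two RDMA NICs for storage traffic. Every I/O component is designed so that the eight GPUs can deliver their full compute power.

The two topologies show that general-purpose servers and GPU servers differ fundamentally in basic hardware construction: one is built around general-purpose CPUs, the other around GPUs. This difference has to be taken into account during hardware selection. As a rule, a general-purpose server cannot be repurposed into a high-performance GPU server, because its PCIe slot count, chassis space, cooling design, and power supply all fall short.

Once the computing workload determines the compute requirement, and hence the GPU model and quantity, we can go on to plan the network for the whole GPU cluster.

Due to resource constraints, this experiment uses three slightly modified general-purpose servers for the parallel training and inference tests that follow.

The hardware configuration of each compute node is as follows: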

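CPU: Intel(R) Xeon(R) CPU E5-2678 v3 @ 2.50GHz * 2

GPU: NVIDIA GeForce RTX 4060 Ti 16G * 1

Memory: 128G

Storage: 10T HDD * 2

NICs: MGMT, CX5

Other considerations:

Cooling: the GPU is a full-height card but the server is only 2U, so the top cover has to be removed;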

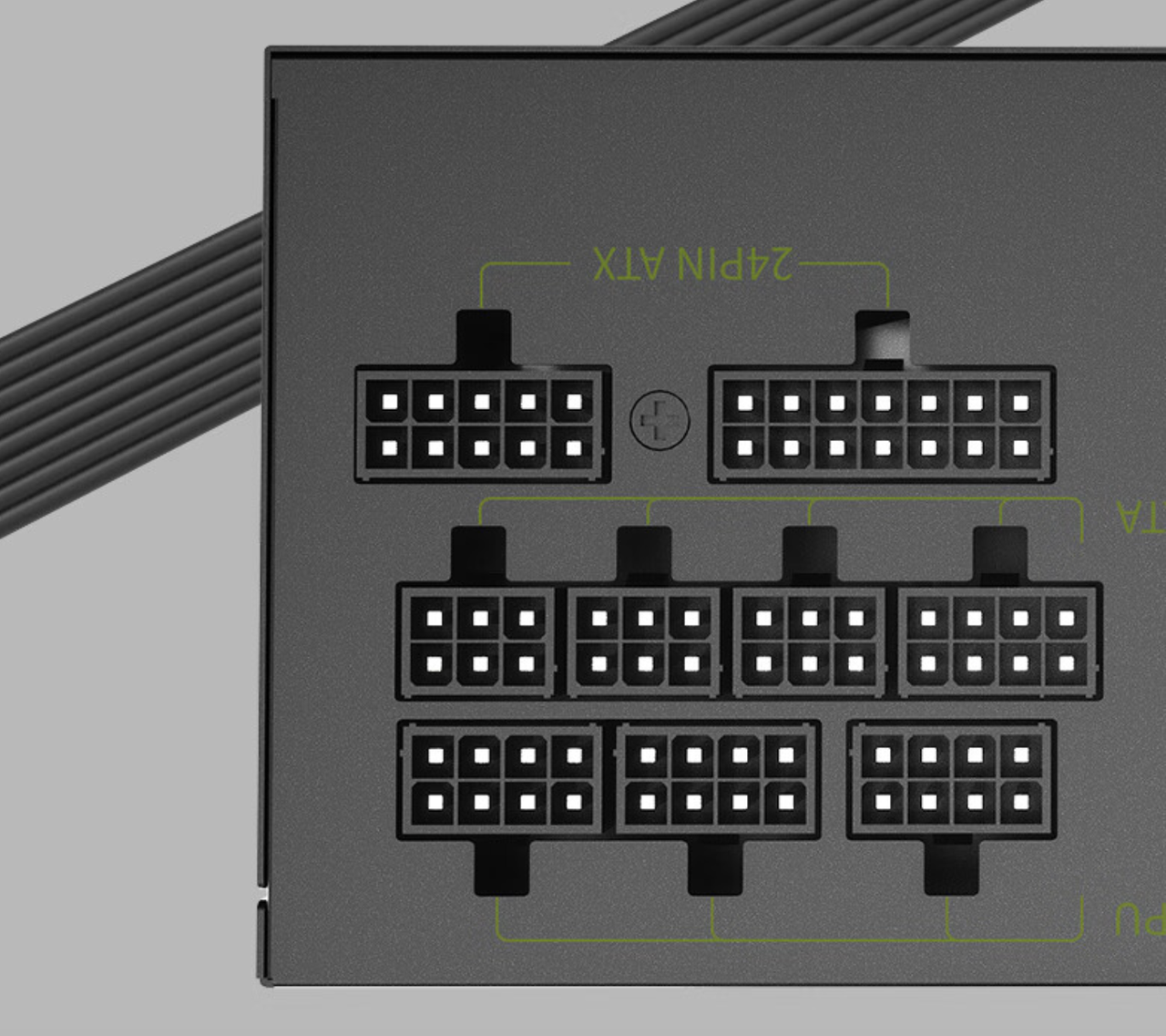

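Power: general-purpose servers usually do not provide enough spare power connectors, so an external power supply is needed to feed the GPUs;

The power supply chosen is a Great Wall X6 rated at 650 W. Its capacity covers three GPUs at once (each RTX 4060 Ti needs 150 W of external power), and it provides three 8-pin connectors, one for each GPU.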
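The power budget above is simple arithmetic, and a small sketch makes the margin explicit. The helper name `psu_headroom` is hypothetical, and the calculation only covers the external GPU feed stated in this article (650 W rated, three GPUs at 150 W each); it does not model conversion losses or transient peaks.

```shell
# Hypothetical helper: remaining PSU headroom in watts.
# usage: psu_headroom <psu_rated_w> <gpu_count> <per_gpu_external_w>
psu_headroom() {
    echo $(( $1 - $2 * $3 ))
}

# Figures from this lab: one 650 W PSU feeding three GPUs at 150 W each.
headroom=$(psu_headroom 650 3 150)
echo "Total GPU draw: $((3 * 150)) W, headroom: ${headroom} W"
```

A negative result would indicate that the chosen supply cannot feed the planned number of GPUs.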

Figure 4: Power supply selection

Figure 5: GPU and RDMA NIC installed in the server

High-performance compute network selection

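The management network of an intelligent computing center differs little from that of a traditional general-purpose data center. The scale-out compute network and the storage network are the special cases: the traffic these two networks carry determines what the switches must provide, namely RDMA support, low latency, and high throughput.

As the figure below shows, the cabling also differs. GPUs are grouped (#L0~7 in one group, #L8~15 in another) to form high-bandwidth interconnect domains (HB domains) that are only one hop wide, and the groups are connected through Rail switches optimized for intelligent computing scenarios, enabling efficient data transfer and compute coordination.

Figure 6: Network connection diagram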

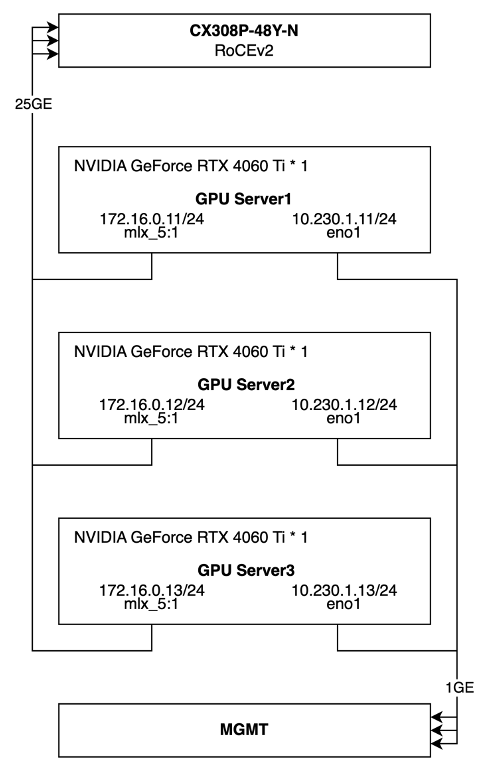

In this experiment, the compute network uses an Asterfusion CX-N series ultra-low-latency switch, model CX308P-48Y-N.

| Model | Service ports | Switching capacity |
| --- | --- | --- |
| CX864E-N | 64 x 800GE OSFP, 2 x 10GE SFP+ | 102.4Tbps |
| CX732Q-N | 32 x 400GE QSFP-DD, 2 x 10GE SFP+ | 25.6Tbps |
| CX664D-N | 64 x 200GE QSFP56, 2 x 10GE SFP+ | 25.6Tbps |
| CX564P-N | 64 x 100GE QSFP28, 2 x 10GE SFP+ | 12.8Tbps |
| CX532P-N | 32 x 100GE QSFP28, 2 x 10GE SFP+ | 6.4Tbps |
| CX308P-48Y-N | 48 x 25GE SFP28, 8 x 100GE QSFP28 | 4.0Tbps |

Table 1: Specifications of the CX-N series models

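Improving large-model training efficiency

The per-device forwarding latency of CX-N data center switches (400 ns) is as low as 1/4 to 1/5 of the industry average, which minimizes the network's share of end-to-end latency in AI/ML applications. A multi-dimensional high-reliability design is meant to keep the network running without interruption, helping to shorten large-model training time and raise overall efficiency.

RoCEv2 as standard across the whole series

Unlike traditional vendors' multi-tier license schemes, CX-N data center switches carry the same license entitlement in every application scenario. RoCEv2 is standard across the series, along with production-oriented enhancements such as PFC, ECN, and Easy RoCE. Users pay no extra network build-out cost for these advanced features, which helps improve ROI.

An open, neutral AI/ML network

The openness of Asterfusion's AI/ML network solution lets users reuse existing systems (K8s, Prometheus, and so on) to manage the network without duplicate investment. Asterfusion positions itself as a neutral network vendor in the AI ecosystem, offering specialized network solutions that help users avoid full-stack lock-in.

The final lab topology and basic configuration are shown below.

Figure 7: Lab topology and basic configuration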

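Software preparation

With hardware selection complete, we move on to software configuration: deploying the RoCEv2 switch, configuring the GPU servers, and installing the GPU driver and collective communication library.

RoCEv2 switch

Figure 8: The CX308P-48Y-N switch

The parallel training environment here has few devices, so the network is relatively simple:

1. Connect the 25GE service port of each CX5 NIC to the CX308P;

2. Enable the global RoCE lossless configuration on the switch in a single step;

3. Put the three 25G service ports into one VLAN to form a Layer 2 network.

As noted earlier, RoCEv2 is standard across the CX-N series. Together with the AsterNOS network operating system, two command lines are enough to configure all the necessary QoS rules and parameters. The session is shown below: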

noone@MacBook-Air ~ % ssh admin@10.230.1.17

Linux AsterNOS 5.10.0-8-2-amd64 #1 SMP Debian 5.10.46-4 (2021-08-03) x86_64

_ _ _ _ ___ ____

/ ___ | |_ ___ _ __ | | | / _ / ___|

/ _ / __|| __| / _ | '__|| | || | | |___

/ ___ __ | |_ | __/| | | | || |_| | ___) |

/_/ _|___/ __| ___||_| |_| _| ___/ |____/

------- Asterfusion Network Operating System -------

Help: http://www.asterfusion.com/

Last login: Sun Sep 29 17:10:46 2024 from 172.16.20.241

AsterNOS# configure terminal

AsterNOS(config)# qos roce lossless

AsterNOS(config)# qos service-policy roce_lossless

AsterNOS(config)# end

AsterNOS# show qos roce

operational description

------------------ ------------- ---------------------------------------------------

status bind qos roce binding status

mode lossless Roce Mode

cable-length 40m Cable Length(in meters) for Roce Lossless Config

congestion-control

- congestion-mode ECN congestion-control

- enabled-tc 3,4 Congestion config enabled Traffic Class

- max-threshold 750000 Congestion config max-threshold

- min-threshold 15360 Congestion config max-threshold

pfc

- pfc-priority 3,4 switch-prio on which PFC is enabled

- rx-enabled enable PFC Rx Enabled status

- tx-enabled enable PFC Tx Enabled status

trust

- trust-mode dscp Trust Setting on the port for packet classification

RoCE DSCP->SP mapping configurations

==========================================

dscp switch-prio

----------------------- -------------

0,1,2,3,4,5,6,7 0

10,11,12,13,14,15,8,9 1

16,17,18,19,20,21,22,23 2

24,25,26,27,28,29,30,31 3

32,33,34,35,36,37,38,39 4

40,41,42,43,44,45,46,47 5

48,49,50,51,52,53,54,55 6

56,57,58,59,60,61,62,63 7

RoCE SP->TC mapping and ETS configurations

================================================

switch-prio mode weight

------------- ------ --------

6 SP

7 SP

RoCE pool config

======================

name switch-prio

----------------------- -------------

egress_lossy_profile 0 1 2 5 6

ingress_lossy_profile 0 1 2 5 6

egress_lossless_profile 3 4

roce_lossless_profile 3 4
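The DSCP-to-switch-priority table in the output above follows a regular pattern: each block of eight consecutive DSCP values maps to one priority, so the priority is the DSCP value divided by 8 (integer division). The sketch below reproduces that mapping and flags the priorities on which the output shows PFC enabled (3 and 4). The function names are hypothetical, and the mapping is inferred from this particular output rather than taken from AsterNOS documentation.

```shell
# dscp_to_prio <dscp>: integer-divide by 8, matching the table in the output above.
dscp_to_prio() {
    dscp=$1
    if [ "$dscp" -lt 0 ] || [ "$dscp" -gt 63 ]; then
        echo "DSCP must be 0-63" >&2
        return 1
    fi
    echo $(( dscp / 8 ))
}

# has_pfc <prio>: the output above lists pfc-priority 3,4.
has_pfc() {
    case "$1" in
        3|4) return 0 ;;
        *)   return 1 ;;
    esac
}

for d in 0 26 34 48; do
    p=$(dscp_to_prio "$d")
    if has_pfc "$p"; then q="PFC on"; else q="PFC off"; fi
    echo "DSCP $d -> switch-prio $p ($q)"
done
```

This kind of check is useful when choosing the DSCP value that RDMA traffic is marked with on the servers: the value has to land in a priority for which the switch has PFC and ECN enabled, otherwise the traffic is treated as ordinary lossy traffic.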

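Basic GPU server configuration

All of the following steps must be performed on all three servers. This document uses server3 as the example.

Disable the firewall and SELinux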

[root@server3 ~]# systemctl stop firewalld

[root@server3 ~]# systemctl disable firewalld

[root@server3 ~]# setenforce 0

[root@server3 ~]# sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

Set up passwordless SSH login between servers

[root@server3 ~]# ssh-keygen

[root@server3 ~]# ssh-copy-id root@server1

[root@server3 ~]# ssh-copy-id root@server2
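Because the key distribution has to be repeated on every node, it helps to verify the result rather than assume it. The following is a minimal sketch, assuming the three hostnames used in this article resolve on each server; `peers_of` and `check_peers` are hypothetical helper names. `BatchMode=yes` makes `ssh` fail instead of falling back to a password prompt, which is the behavior wanted for a check.

```shell
# Hostnames are the three lab servers named in this article.
CLUSTER_HOSTS="server1 server2 server3"

# peers_of <hostname>: print every cluster host except the given one.
peers_of() {
    out=""
    for h in $CLUSTER_HOSTS; do
        [ "$h" = "$1" ] && continue
        out="${out:+$out }$h"
    done
    echo "$out"
}

# check_peers <hostname>: try a non-interactive login to each peer.
# Defined here but not invoked, since it needs the real cluster.
check_peers() {
    for h in $(peers_of "$1"); do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "root@$h" true; then
            echo "$h: ok"
        else
            echo "$h: passwordless login FAILED"
        fi
    done
}

# Show which peers server3 would be checked against.
peers_of server3
```

On a real node the check would be run as `check_peers "$(hostname -s)"`, assuming the short hostname matches one of the names in `CLUSTER_HOSTS`.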

Configure the server package repositories

[root@server3 ~]# ll /etc/yum.repos.d/

總用量 80

-rw-r--r-- 1 root root 2278 9月 19 08:00 CentOS-Base.repo

-rw-r--r-- 1 root root 232 9月 19 08:00 cuda-rhel7.repo

-rw-r--r-- 1 root root 210 9月 19 08:00 cudnn-local-rhel7-8.9.7.29.repo

drwxr-xr-x 2 root root 4096 9月 19 07:58 disable.d

-rw-r--r-- 1 root root 664 9月 19 08:00 epel.repo

-rw-r--r-- 1 root root 381 9月 19 08:00 hashicorp.repo

-rw-r--r-- 1 root root 218 9月 19 08:00 kubernetes.repo

-rw-r--r-- 1 root root 152 9月 19 08:00 MariaDB.repo

-rw-r--r-- 1 root root 855 9月 19 08:00 remi-modular.repo

-rw-r--r-- 1 root root 456 9月 19 08:00 remi-php54.repo

-rw-r--r-- 1 root root 1314 9月 19 08:00 remi-php70.repo

-rw-r--r-- 1 root root 1314 9月 19 08:00 remi-php71.repo

-rw-r--r-- 1 root root 1314 9月 19 08:00 remi-php72.repo

-rw-r--r-- 1 root root 1314 9月 19 08:00 remi-php73.repo

-rw-r--r-- 1 root root 1314 9月 19 08:00 remi-php74.repo

-rw-r--r-- 1 root root 1314 9月 19 08:00 remi-php80.repo

-rw-r--r-- 1 root root 1314 9月 19 08:00 remi-php81.repo

-rw-r--r-- 1 root root 1314 9月 19 08:00 remi-php82.repo

-rw-r--r-- 1 root root 2605 9月 19 08:00 remi.repo

-rw-r--r-- 1 root root 750 9月 19 08:00 remi-safe.repo

[root@server3 ~]# more /etc/yum.repos.d/*.repo

::::::::::::::

/etc/yum.repos.d/CentOS-Base.repo

::::::::::::::

# CentOS-Base.repo

#

# The mirror system uses the connecting IP address of the client and the

# update status of each mirror to pick mirrors that are updated to and

# geographically close to the client. You should use this for CentOS updates

# unless you are manually picking other mirrors.

#

# If the mirrorlist= does not work for you, as a fall back you can try the

# remarked out baseurl= line instead.

#

#

[base]

name=CentOS-7 - Base - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/7/os/x86_64/

http://mirrors.aliyuncs.com/centos/7/os/x86_64/

http://mirrors.cloud.aliyuncs.com/centos/7/os/x86_64/

gpgcheck=1

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#released updates

[updates]

name=CentOS-7 - Updates - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/7/updates/x86_64/

http://mirrors.aliyuncs.com/centos/7/updates/x86_64/

http://mirrors.cloud.aliyuncs.com/centos/7/updates/x86_64/

gpgcheck=1

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#additional packages that may be useful

[extras]

name=CentOS-7 - Extras - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/7/extras/x86_64/

http://mirrors.aliyuncs.com/centos/7/extras/x86_64/

http://mirrors.cloud.aliyuncs.com/centos/7/extras/x86_64/

gpgcheck=1

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#additional packages that extend functionality of existing packages

[centosplus]

name=CentOS-7 - Plus - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/7/centosplus/x86_64/

http://mirrors.aliyuncs.com/centos/7/centosplus/x86_64/

http://mirrors.cloud.aliyuncs.com/centos/7/centosplus/x86_64/

gpgcheck=1

enabled=0

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#contrib - packages by Centos Users

[contrib]

name=CentOS-7 - Contrib - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/7/contrib/x86_64/

http://mirrors.aliyuncs.com/centos/7/contrib/x86_64/

http://mirrors.cloud.aliyuncs.com/centos/7/contrib/x86_64/

gpgcheck=1

enabled=0

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

::::::::::::::

/etc/yum.repos.d/cuda-rhel7.repo

::::::::::::::

[cuda-rhel7-x86_64]

name=cuda-rhel7-x86_64

baseurl=https://developer.download.nvidia.com/compute/cuda/repos/rhel7/x86_64

enabled=1

gpgcheck=1

gpgkey=https://developer.download.nvidia.com/compute/cuda/repos/rhel7/x86_64/D42D0685.pub

::::::::::::::

/etc/yum.repos.d/cudnn-local-rhel7-8.9.7.29.repo

::::::::::::::

[cudnn-local-rhel7-8.9.7.29]

name=cudnn-local-rhel7-8.9.7.29

baseurl=file:///var/cudnn-local-repo-rhel7-8.9.7.29

enabled=1

gpgcheck=1

gpgkey=file:///var/cudnn-local-repo-rhel7-8.9.7.29/90F10142.pub

obsoletes=0

::::::::::::::

/etc/yum.repos.d/epel.repo

::::::::::::::

[epel]

name=Extra Packages for Enterprise Linux 7 - $basearch

baseurl=http://mirrors.aliyun.com/epel/7/$basearch

failovermethod=priority

enabled=1

gpgcheck=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7

[epel-debuginfo]

name=Extra Packages for Enterprise Linux 7 - $basearch - Debug

baseurl=http://mirrors.aliyun.com/epel/7/$basearch/debug

failovermethod=priority

enabled=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7

gpgcheck=0

[epel-source]

name=Extra Packages for Enterprise Linux 7 - $basearch - Source

baseurl=http://mirrors.aliyun.com/epel/7/SRPMS

failovermethod=priority

enabled=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7

gpgcheck=0

::::::::::::::

/etc/yum.repos.d/hashicorp.repo

::::::::::::::

[hashicorp]

name=Hashicorp Stable - $basearch

baseurl=https://rpm.releases.hashicorp.com/RHEL/$releasever/$basearch/stable

enabled=0

gpgcheck=1

gpgkey=https://rpm.releases.hashicorp.com/gpg

[hashicorp-test]

name=Hashicorp Test - $basearch

baseurl=https://rpm.releases.hashicorp.com/RHEL/$releasever/$basearch/test

enabled=0

gpgcheck=1

gpgkey=https://rpm.releases.hashicorp.com/gpg

::::::::::::::

/etc/yum.repos.d/kubernetes.repo

::::::::::::::

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.28/rpm/

enabled=1

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.28/rpm/repodata/repomd.xml.key

::::::::::::::

/etc/yum.repos.d/MariaDB.repo

::::::::::::::

[mariadb]

name = MariaDB

baseurl = https://mirror.mariadb.org/yum/11.2/centos74-amd64

gpgkey = https://yum.mariadb.org/RPM-GPG-KEY-MariaDB

gpgcheck = 0

::::::::::::::

/etc/yum.repos.d/remi-modular.repo

::::::::::::::

# Repository: https://rpms.remirepo.net/

# Blog: https://blog.remirepo.net/

# Forum: https://forum.remirepo.net/

[remi-modular]

name=Remi's Modular repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/modular/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/modular/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/modular/mirror

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-modular-test]

name=Remi's Modular testing repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/modular-test/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/modular-test/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/modular-test/mirror

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

::::::::::::::

/etc/yum.repos.d/remi-php54.repo

::::::::::::::

# This repository only provides PHP 5.4 and its extensions

# NOTICE: common dependencies are in "remi-safe"

[remi-php54]

name=Remi's PHP 5.4 RPM repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/php54/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/php54/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/php54/mirror

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

::::::::::::::

/etc/yum.repos.d/remi-php70.repo

::::::::::::::

# This repository only provides PHP 7.0 and its extensions

# NOTICE: common dependencies are in "remi-safe"

[remi-php70]

name=Remi's PHP 7.0 RPM repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/php70/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/php70/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/php70/mirror

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php70-debuginfo]

name=Remi's PHP 7.0 RPM repository for Enterprise Linux 7 - $basearch - debuginfo

baseurl=http://rpms.remirepo.net/enterprise/7/debug-php70/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php70-test]

name=Remi's PHP 7.0 test RPM repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/test70/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/test70/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/test70/mirror

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php70-test-debuginfo]

name=Remi's PHP 7.0 test RPM repository for Enterprise Linux 7 - $basearch - debuginfo

baseurl=http://rpms.remirepo.net/enterprise/7/debug-test70/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

::::::::::::::

/etc/yum.repos.d/remi-php71.repo

::::::::::::::

# This repository only provides PHP 7.1 and its extensions

# NOTICE: common dependencies are in "remi-safe"

[remi-php71]

name=Remi's PHP 7.1 RPM repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/php71/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/php71/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/php71/mirror

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php71-debuginfo]

name=Remi's PHP 7.1 RPM repository for Enterprise Linux 7 - $basearch - debuginfo

baseurl=http://rpms.remirepo.net/enterprise/7/debug-php71/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php71-test]

name=Remi's PHP 7.1 test RPM repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/test71/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/test71/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/test71/mirror

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php71-test-debuginfo]

name=Remi's PHP 7.1 test RPM repository for Enterprise Linux 7 - $basearch - debuginfo

baseurl=http://rpms.remirepo.net/enterprise/7/debug-test71/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

::::::::::::::

/etc/yum.repos.d/remi-php72.repo

::::::::::::::

# This repository only provides PHP 7.2 and its extensions

# NOTICE: common dependencies are in "remi-safe"

[remi-php72]

name=Remi's PHP 7.2 RPM repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/php72/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/php72/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/php72/mirror

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php72-debuginfo]

name=Remi's PHP 7.2 RPM repository for Enterprise Linux 7 - $basearch - debuginfo

baseurl=http://rpms.remirepo.net/enterprise/7/debug-php72/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php72-test]

name=Remi's PHP 7.2 test RPM repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/test72/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/test72/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/test72/mirror

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php72-test-debuginfo]

name=Remi's PHP 7.2 test RPM repository for Enterprise Linux 7 - $basearch - debuginfo

baseurl=http://rpms.remirepo.net/enterprise/7/debug-test72/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

::::::::::::::

/etc/yum.repos.d/remi-php73.repo

::::::::::::::

# This repository only provides PHP 7.3 and its extensions

# NOTICE: common dependencies are in "remi-safe"

[remi-php73]

name=Remi's PHP 7.3 RPM repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/php73/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/php73/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/php73/mirror

enabled=1

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php73-debuginfo]

name=Remi's PHP 7.3 RPM repository for Enterprise Linux 7 - $basearch - debuginfo

baseurl=http://rpms.remirepo.net/enterprise/7/debug-php73/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php73-test]

name=Remi's PHP 7.3 test RPM repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/test73/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/test73/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/test73/mirror

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php73-test-debuginfo]

name=Remi's PHP 7.3 test RPM repository for Enterprise Linux 7 - $basearch - debuginfo

baseurl=http://rpms.remirepo.net/enterprise/7/debug-test73/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

::::::::::::::

/etc/yum.repos.d/remi-php74.repo

::::::::::::::

# This repository only provides PHP 7.4 and its extensions

# NOTICE: common dependencies are in "remi-safe"

[remi-php74]

name=Remi's PHP 7.4 RPM repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/php74/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/php74/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/php74/mirror

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php74-debuginfo]

name=Remi's PHP 7.4 RPM repository for Enterprise Linux 7 - $basearch - debuginfo

baseurl=http://rpms.remirepo.net/enterprise/7/debug-php74/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php74-test]

name=Remi's PHP 7.4 test RPM repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/test74/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/test74/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/test74/mirror

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php74-test-debuginfo]

name=Remi's PHP 7.4 test RPM repository for Enterprise Linux 7 - $basearch - debuginfo

baseurl=http://rpms.remirepo.net/enterprise/7/debug-test74/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

::::::::::::::

/etc/yum.repos.d/remi-php80.repo

::::::::::::::

# This repository only provides PHP 8.0 and its extensions

# NOTICE: common dependencies are in "remi-safe"

[remi-php80]

name=Remi's PHP 8.0 RPM repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/php80/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/php80/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/php80/mirror

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php80-debuginfo]

name=Remi's PHP 8.0 RPM repository for Enterprise Linux 7 - $basearch - debuginfo

baseurl=http://rpms.remirepo.net/enterprise/7/debug-php80/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php80-test]

name=Remi's PHP 8.0 test RPM repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/test80/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/test80/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/test80/mirror

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php80-test-debuginfo]

name=Remi's PHP 8.0 test RPM repository for Enterprise Linux 7 - $basearch - debuginfo

baseurl=http://rpms.remirepo.net/enterprise/7/debug-test80/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

::::::::::::::

/etc/yum.repos.d/remi-php81.repo

::::::::::::::

# This repository only provides PHP 8.1 and its extensions

# NOTICE: common dependencies are in "remi-safe"

[remi-php81]

name=Remi's PHP 8.1 RPM repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/php81/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/php81/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/php81/mirror

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php81-debuginfo]

name=Remi's PHP 8.1 RPM repository for Enterprise Linux 7 - $basearch - debuginfo

baseurl=http://rpms.remirepo.net/enterprise/7/debug-php81/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php81-test]

name=Remi's PHP 8.1 test RPM repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/test81/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/test81/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/test81/mirror

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php81-test-debuginfo]

name=Remi's PHP 8.1 test RPM repository for Enterprise Linux 7 - $basearch - debuginfo

baseurl=http://rpms.remirepo.net/enterprise/7/debug-test81/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

::::::::::::::

/etc/yum.repos.d/remi-php82.repo

::::::::::::::

# This repository only provides PHP 8.2 and its extensions

# NOTICE: common dependencies are in "remi-safe"

[remi-php82]

name=Remi's PHP 8.2 RPM repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/php82/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/php82/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/php82/mirror

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php82-debuginfo]

name=Remi's PHP 8.2 RPM repository for Enterprise Linux 7 - $basearch - debuginfo

baseurl=http://rpms.remirepo.net/enterprise/7/debug-php82/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php82-test]

name=Remi's PHP 8.2 test RPM repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/test82/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/test82/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/test82/mirror

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php82-test-debuginfo]

name=Remi's PHP 8.2 test RPM repository for Enterprise Linux 7 - $basearch - debuginfo

baseurl=http://rpms.remirepo.net/enterprise/7/debug-test82/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

::::::::::::::

/etc/yum.repos.d/remi.repo

::::::::::::::

# Repository: http://rpms.remirepo.net/

# Blog: http://blog.remirepo.net/

# Forum: http://forum.remirepo.net/

[remi]

name=Remi's RPM repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/remi/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/remi/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/remi/mirror

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php55]

name=Remi's PHP 5.5 RPM repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/php55/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/php55/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/php55/mirror

# NOTICE: common dependencies are in "remi-safe"

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php56]

name=Remi's PHP 5.6 RPM repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/php56/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/php56/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/php56/mirror

# NOTICE: common dependencies are in "remi-safe"

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-test]

name=Remi's test RPM repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/test/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/test/mirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/test/mirror

# WARNING: If you enable this repository, you must also enable "remi"

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-debuginfo]

name=Remi's RPM repository for Enterprise Linux 7 - $basearch - debuginfo

baseurl=http://rpms.remirepo.net/enterprise/7/debug-remi/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php55-debuginfo]

name=Remi's PHP 5.5 RPM repository for Enterprise Linux 7 - $basearch - debuginfo

baseurl=http://rpms.remirepo.net/enterprise/7/debug-php55/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-php56-debuginfo]

name=Remi's PHP 5.6 RPM repository for Enterprise Linux 7 - $basearch - debuginfo

baseurl=http://rpms.remirepo.net/enterprise/7/debug-php56/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-test-debuginfo]

name=Remi's test RPM repository for Enterprise Linux 7 - $basearch - debuginfo

baseurl=http://rpms.remirepo.net/enterprise/7/debug-test/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

::::::::::::::

/etc/yum.repos.d/remi-safe.repo

::::::::::::::

# This repository is safe to use with RHEL/CentOS base repository

# it only provides additional packages for the PHP stack

# all dependencies are in base repository or in EPEL

[remi-safe]

name=Safe Remi's RPM repository for Enterprise Linux 7 - $basearch

#baseurl=http://rpms.remirepo.net/enterprise/7/safe/$basearch/

#mirrorlist=https://rpms.remirepo.net/enterprise/7/safe/httpsmirror

mirrorlist=http://cdn.remirepo.net/enterprise/7/safe/mirror

enabled=1

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[remi-safe-debuginfo]

name=Remi's RPM repository for Enterprise Linux 7 - $basearch - debuginfo

baseurl=http://rpms.remirepo.net/enterprise/7/debug-remi/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-remi

[root@server3 ~]#

Install Python 3

Prepare the working directory

[root@server3 lichao]# mkdir AIGC

[root@server3 lichao]# cd AIGC/

Build Python 3 from source

Install the build tools and dependencies

[root@server3 AIGC]# yum install wget gcc openssl-devel bzip2-devel libffi-devel

[root@server3 AIGC]# yum install openssl11 openssl11-devel openssl-devel

Unpack the source archive

[root@server3 AIGC]# tar xvf Python-3.11.9.tar.xz

[root@server3 AIGC]# cd Python-3.11.9

[root@server3 Python-3.11.9]#

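Set the environment variables so that the build picks up the OpenSSL headers and libraries from the openssl11 packages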

[root@server3 Python-3.11.9]# export CFLAGS=$(pkg-config --cflags openssl11)

[root@server3 Python-3.11.9]# export LDFLAGS=$(pkg-config --libs openssl11)

Build and install

[root@server3 Python-3.11.9]# mkdir -p /home/lichao/opt/python3.11.9

[root@server3 Python-3.11.9]# ./configure --prefix=/home/lichao/opt/python3.11.9

[root@server3 Python-3.11.9]# make && make install

Create symbolic links for system-wide access

[root@server3 Python-3.11.9]# cd /home/lichao/opt/python3.11.9/

[root@server3 python3.11.9]# ln -s /home/lichao/opt/python3.11.9/bin/python3 /usr/bin/python3

[root@server3 python3.11.9]# ln -s /home/lichao/opt/python3.11.9/bin/pip3 /usr/bin/pip3

[root@server3 python3.11.9]# ll /usr/bin/python3

lrwxrwxrwx 1 root root 41 5月 16 08:32 /usr/bin/python3 -> /home/lichao/opt/python3.11.9/bin/python3

[root@server3 python3.11.9]# ll /usr/bin/pip3

lrwxrwxrwx 1 root root 38 5月 16 08:32 /usr/bin/pip3 -> /home/lichao/opt/python3.11.9/bin/pip3

Verify the installation

[root@server3 python3.11.9]# python3

Python 3.11.9 (main, May 16 2024, 08:23:00) [GCC 4.8.5 20150623 (Red Hat 4.8.5-44)] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> exit()

[root@server3 python3.11.9]#
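Starting the interpreter confirms that it runs, but not that the `ssl` module was built against a suitable OpenSSL, which is the reason the `openssl11` flags were exported earlier: Python 3.10 and later require OpenSSL 1.1.1 or newer, while the stock CentOS 7 OpenSSL is 1.0.2. The sketch below checks a version string of the form printed by `ssl.OPENSSL_VERSION`. The helper name `openssl_ok` is hypothetical, the two sample strings are illustrative rather than captured from the lab servers, and the comparison relies on `sort -V` from GNU coreutils.

```shell
# openssl_ok "<version string>": succeed if the version is at least 1.1.1.
openssl_ok() {
    # Second field is the version; drop any letter suffix such as "k" or "k-fips".
    ver=$(printf '%s\n' "$1" | awk '{print $2}' | sed 's/[^0-9.].*$//')
    [ -n "$ver" ] || return 1
    lowest=$(printf '%s\n' "1.1.1" "$ver" | sort -V | head -n 1)
    [ "$lowest" = "1.1.1" ]
}

# On the server, the string would come from the freshly built interpreter:
#   openssl_ok "$(python3 -c 'import ssl; print(ssl.OPENSSL_VERSION)')"
for s in "OpenSSL 1.1.1k  FIPS 25 Mar 2021" "OpenSSL 1.0.2k-fips  26 Jan 2017"; do
    if openssl_ok "$s"; then echo "ok:      $s"; else echo "too old: $s"; fi
done
```

If `import ssl` fails outright, the command substitution yields an empty string and the check fails as well, which usually means `pip3` will not be able to reach HTTPS package indexes.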

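Install the MLNX NIC driver

This section uses CentOS 7 as the example and describes how to install the MLNX_OFED driver for Mellanox NICs and upgrade the firmware.

The driver package downloaded for this setup is MLNX_OFED_LINUX-4.7-3.2.9.0-rhel7.6-x86_64.tgz.

[root@server3 ~]# tar -zxvf MLNX_OFED_LINUX-4.7-3.2.9.0-rhel7.6-x86_64.tgz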

[root@server3 ~]# cd MLNX_OFED_LINUX-4.7-3.2.9.0-rhel7.6-x86_64

Check the running kernel version

[root@server3 MLNX_OFED_LINUX-4.7-3.2.9.0-rhel7.6-x86_64]# uname -r

3.10.0-957.el7.x86_64

Check the kernel versions the driver package supports

[root@server3 MLNX_OFED_LINUX-4.7-3.2.9.0-rhel7.6-x86_64]# cat .supported_kernels

3.10.0-957.el7.x86_64

Note: the output above shows that the stock driver package supports the running kernel.
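The manual comparison of `uname -r` against `.supported_kernels` can be scripted so that the decision between a direct install and a rebuild is explicit. This is a minimal sketch: `kernel_supported` is a hypothetical helper, and the demonstration uses a temporary file standing in for `.supported_kernels` so that it runs anywhere. The second kernel string is only an example of a non-matching release.

```shell
# kernel_supported <kernel-release> <supported-kernels-file>
# Succeeds only when the release string matches a whole line of the file.
# -x: whole-line match, -F: treat the release as a fixed string, not a regex.
kernel_supported() {
    grep -qxF -- "$1" "$2"
}

# Typical use from inside the unpacked MLNX_OFED directory:
#   if kernel_supported "$(uname -r)" .supported_kernels; then ... fi

# Self-contained demonstration with a temporary stand-in file.
tmp=$(mktemp)
printf '%s\n' '3.10.0-957.el7.x86_64' > "$tmp"
if kernel_supported '3.10.0-957.el7.x86_64' "$tmp"; then
    echo "stock driver package can be installed directly"
fi
if ! kernel_supported '3.10.0-1160.el7.x86_64' "$tmp"; then
    echo "rebuild needed: run mlnx_add_kernel_support.sh first"
fi
rm -f "$tmp"
```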

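If the running kernel is not in the supported list, build a driver package for it. Before building, install the gcc toolchain and the kernel development package:

[root@server3 MLNX_OFED_LINUX-4.7-3.2.9.0-rhel7.6-x86_64]# yum install gcc gcc-c++ libstdc++-devel kernel-devel

Add driver support for the running kernel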

[root@server3 MLNX_OFED_LINUX-4.7-3.2.9.0-rhel7.6-x86_64]#./mlnx_add_kernel_support.sh -m /root/MLNX_OFED_LINUX-4.7-3.2.9.0-rhel7.6-x86_64 -v

Note: when the script finishes, the generated driver package is placed under /tmp

[root@server3 MLNX_OFED_LINUX-4.7-3.2.9.0-rhel7.6-x86_64]# ls -l /tmp/MLNX_OFED_LINUX-4.7-3.2.9.0-rhel7.6-x86_64-ext.tgz

-rw-r--r-- 1 root root 282193833 Dec 23 09:49 /tmp/MLNX_OFED_LINUX-4.7-3.2.9.0-rhel7.6-x86_64-ext.tgz

Install the driver

[root@server3 tmp]# tar xzvf MLNX_OFED_LINUX-4.7-3.2.9.0-rhel7.6-x86_64-ext.tgz

[root@server3 tmp]# cd MLNX_OFED_LINUX-4.7-3.2.9.0-rhel7.6-x86_64-ext

[root@server3 MLNX_OFED_LINUX-4.7-3.2.9.0-rhel7.6-x86_64-ext]# ./mlnxofedinstall

Finally, start the openibd service and enable it at boot

[root@server3 ~]# /etc/init.d/openibd start

[root@server3 ~]# chkconfig openibd on

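Install the GPU driver and collective communication library

Installation and configuration

- Install the GPU driver, CUDA, and cuDNN

Before starting, download the packages that match your GPU model and operating system version from the NVIDIA website.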

[root@server3 AIGC]# ll

總用量 1733448

-rw-r--r-- 1 root root 1430373861 5月 16 08:55 cudnn-local-repo-rhel7-8.9.7.29-1.0-1.x86_64.rpm

drwxr-xr-x 7 root root 141 5月 17 13:45 nccl-tests

-rwxr-xr-x 1 root root 306736632 5月 16 08:43 NVIDIA-Linux-x86_64-550.67.run

drwxrwxr-x 10 1000 1000 4096 5月 17 13:21 openmpi-4.1.6

-rw-r--r-- 1 root root 17751702 9月 30 2023 openmpi-4.1.6.tar.gz

drwxr-xr-x 17 root root 4096 5月 16 08:23 Python-3.11.9

-rw-r--r-- 1 root root 20175816 4月 2 13:11 Python-3.11.9.tar.xz

[root@server3 AIGC]# ./NVIDIA-Linux-x86_64-550.67.run

Verifying archive integrity... OK

Uncompressing NVIDIA Accelerated Graphics Driver for Linux-x86_64 550.67...................

[root@server3 AIGC]# yum-config-manager --add-repo https://developer.download.nvidia.com/compute/cuda/repos/rhel7/x86_64/cuda-rhel7.repo

已加載插件:fastestmirror, nvidia

adding repo from: https://developer.download.nvidia.com/compute/cuda/repos/rhel7/x86_64/cuda-rhel7.repo

grabbing file https://developer.download.nvidia.com/compute/cuda/repos/rhel7/x86_64/cuda-rhel7.repo to /etc/yum.repos.d/cuda-rhel7.repo

repo saved to /etc/yum.repos.d/cuda-rhel7.repo

[root@server3 AIGC]# yum install libnccl-2.21.5-1+cuda12.4 libnccl-devel-2.21.5-1+cuda12.4 libnccl-static-2.21.5-1+cuda12.4

[root@server3 AIGC]# yum install cudnn-local-repo-rhel7-8.9.7.29-1.0-1.x86_64.rpm

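After installation, run nvidia-smi to check the driver and CUDA versions. If the versions do not match, the command reports an error. Note that the CUDA Version field shows the highest CUDA version the driver supports, not necessarily the version of the installed toolkit.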

[root@server3 AIGC]# nvidia-smi

Mon Jun 3 11:59:36 2024

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 550.67 Driver Version: 550.67 CUDA Version: 12.4 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA GeForce RTX 4060 Ti Off | 00000000:02:00.0 Off | N/A |

| 0% 34C P0 27W / 165W | 1MiB / 16380MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| No running processes found |

+-----------------------------------------------------------------------------------------+

[root@server3 AIGC]#
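When the same check has to be repeated on every node, the two version fields can be pulled out of the nvidia-smi banner with a few lines of Python. This is a minimal sketch and not part of the original setup: the helper name `parse_nvidia_smi_header` is hypothetical, and the regular expression assumes the banner layout shown above.

```python
import re


def parse_nvidia_smi_header(text):
    """Return (driver_version, cuda_version) from nvidia-smi banner text, or None."""
    match = re.search(
        r"Driver Version:\s*([\d.]+)\s+CUDA Version:\s*([\d.]+)", text
    )
    if match is None:
        return None
    return match.group(1), match.group(2)


# Banner line copied from the output above.
banner = "| NVIDIA-SMI 550.67 Driver Version: 550.67 CUDA Version: 12.4 |"
print(parse_nvidia_smi_header(banner))
```

In practice the text would come from running nvidia-smi on each server (for example through `subprocess.run`), and the results would be compared across nodes.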

- Build and install OpenMPI

[root@server3 AIGC]# tar xvf openmpi-4.1.6.tar.gz

[root@server3 AIGC]# cd openmpi-4.1.6

[root@server3 openmpi-4.1.6]# mkdir -p /home/lichao/opt/openmpi

[root@server3 openmpi-4.1.6]# ./configure --prefix=/home/lichao/opt/openmpi --with-cuda=/usr/local/cuda-12.4 --with-nccl=/usr/lib64

Open MPI configuration:

-----------------------

Version: 4.1.6

Build MPI C bindings: yes

Build MPI C++ bindings (deprecated): no

Build MPI Fortran bindings: mpif.h, use mpi

MPI Build Java bindings (experimental): no

Build Open SHMEM support: yes

Debug build: no

Platform file: (none)

Miscellaneous

-----------------------

CUDA support: yes

HWLOC support: internal

Libevent support: internal

Open UCC: no

PMIx support: Internal

Transports

-----------------------

Cisco usNIC: no

Cray uGNI (Gemini/Aries): no

Intel Omnipath (PSM2): no

Intel TrueScale (PSM): no

Mellanox MXM: no

Open UCX: yes

OpenFabrics OFI Libfabric: no

OpenFabrics Verbs: yes

Portals4: no

Shared memory/copy in+copy out: yes

Shared memory/Linux CMA: yes

Shared memory/Linux KNEM: no

Shared memory/XPMEM: no

TCP: yes

Resource Managers

-----------------------

Cray Alps: no

Grid Engine: no

LSF: no

Moab: no

Slurm: yes

ssh/rsh: yes

Torque: no

OMPIO File Systems

-----------------------

DDN Infinite Memory Engine: no

Generic Unix FS: yes

IBM Spectrum Scale/GPFS: no

Lustre: no

PVFS2/OrangeFS: no

[root@server3 openmpi-4.1.6]# make -j"$(nproc)" && make install
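The configure summary is long, and the line that matters most for this build is `CUDA support: yes`. The sketch below turns the summary into a dictionary so that a handful of expected entries can be checked automatically. The function names and the choice of which entries to check are assumptions made for illustration; adjust the expected set to your own environment.

```python
def parse_configure_summary(text):
    """Map 'Key: value' lines of a configure summary to a dict."""
    summary = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        # Skip section titles such as "Open MPI configuration:" (empty value).
        if sep and key.strip() and value.strip():
            summary[key.strip()] = value.strip()
    return summary


def missing_features(summary, expected):
    """Return the keys whose value differs from the expected one."""
    return [key for key, value in expected.items() if summary.get(key) != value]


# A few lines copied from the summary above.
sample = """Open MPI configuration:
Version: 4.1.6
CUDA support: yes
Open UCX: yes
OpenFabrics Verbs: yes
Mellanox MXM: no
"""
summary = parse_configure_summary(sample)
print(missing_features(summary, {"CUDA support": "yes", "Open UCX": "yes"}))
```

An empty list means every expected entry was found with the expected value.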

- Build and install nccl-tests

[root@server3 lichao]# cd AIGC/

[root@server3 AIGC]# git clone https://github.com/NVIDIA/nccl-tests.git

[root@server3 AIGC]# cd nccl-tests/

[root@server3 nccl-tests]# make clean

[root@server3 nccl-tests]# make MPI=1 MPI_HOME=/home/lichao/opt/openmpi/ CUDA_HOME=/usr/local/cuda-12.4/ NCCL_HOME=/usr/lib64/

Collective communication performance test (all_reduce)

[root@server1 lichao]# cat run_nccl-test.sh

/home/lichao/opt/openmpi/bin/mpirun --allow-run-as-root \
-np 3 \
-host "server1,server2,server3" \
-mca btl ^openib \
-x NCCL_DEBUG=INFO \
-x NCCL_ALGO=ring \
-x NCCL_IB_DISABLE=0 \
-x NCCL_IB_GID_INDEX=3 \
-x NCCL_SOCKET_IFNAME=ens11f1 \
-x NCCL_IB_HCA=mlx5_1:1 \
/home/lichao/AIGC/nccl-tests/build/all_reduce_perf -b 128 -e 8G -f 2 -g 1

[root@server1 lichao]# ./run_nccl-test.sh

# nThread 1 nGpus 1 minBytes 128 maxBytes 8589934592 step: 2(factor) warmup iters: 5 iters: 20 agg iters: 1 validation: 1 graph: 0

#

# Using devices

# Rank 0 Group 0 Pid 18697 on server1 device 0 [0x02] NVIDIA GeForce RTX 4060 Ti

# Rank 1 Group 0 Pid 20893 on server2 device 0 [0x02] NVIDIA GeForce RTX 4060 Ti

# Rank 2 Group 0 Pid 2458 on server3 device 0 [0x02] NVIDIA GeForce RTX 4060 Ti

#

# Reducing maxBytes to 5261099008 due to memory limitation

server1:18697:18697 [0] NCCL INFO NCCL_SOCKET_IFNAME set by environment to ens11f1

server1:18697:18697 [0] NCCL INFO Bootstrap : Using ens11f1:172.16.0.11

server1:18697:18697 [0] NCCL INFO NET/Plugin: No plugin found (libnccl-net.so)

server1:18697:18697 [0] NCCL INFO NET/Plugin: Plugin load returned 2 : libnccl-net.so: cannot open shared object file: No such file or directory : when loading libnccl-net.so

server1:18697:18697 [0] NCCL INFO NET/Plugin: Using internal network plugin.

server2:20893:20893 [0] NCCL INFO cudaDriverVersion 12040

server2:20893:20893 [0] NCCL INFO NCCL_SOCKET_IFNAME set by environment to ens11f1

server2:20893:20893 [0] NCCL INFO Bootstrap : Using ens11f1:172.16.0.12

server2:20893:20893 [0] NCCL INFO NET/Plugin: No plugin found (libnccl-net.so)

server2:20893:20893 [0] NCCL INFO NET/Plugin: Plugin load returned 2 : libnccl-net.so: cannot open shared object file: No such file or directory : when loading libnccl-net.so

server2:20893:20893 [0] NCCL INFO NET/Plugin: Using internal network plugin.

server1:18697:18697 [0] NCCL INFO cudaDriverVersion 12040

NCCL version 2.21.5+cuda12.4

server3:2458:2458 [0] NCCL INFO cudaDriverVersion 12040

server3:2458:2458 [0] NCCL INFO NCCL_SOCKET_IFNAME set by environment to ens11f1

server3:2458:2458 [0] NCCL INFO Bootstrap : Using ens11f1:172.16.0.13

server3:2458:2458 [0] NCCL INFO NET/Plugin: No plugin found (libnccl-net.so)

server3:2458:2458 [0] NCCL INFO NET/Plugin: Plugin load returned 2 : libnccl-net.so: cannot open shared object file: No such file or directory : when loading libnccl-net.so

server3:2458:2458 [0] NCCL INFO NET/Plugin: Using internal network plugin.

server2:20893:20907 [0] NCCL INFO NCCL_IB_DISABLE set by environment to 0.

server2:20893:20907 [0] NCCL INFO NCCL_SOCKET_IFNAME set by environment to ens11f1

server2:20893:20907 [0] NCCL INFO NCCL_IB_HCA set to mlx5_1:1

server2:20893:20907 [0] NCCL INFO NET/IB : Using [0]mlx5_1:1/RoCE [RO]; OOB ens11f1:172.16.0.12

server2:20893:20907 [0] NCCL INFO Using non-device net plugin version 0

server2:20893:20907 [0] NCCL INFO Using network IB

server3:2458:2473 [0] NCCL INFO NCCL_IB_DISABLE set by environment to 0.

server3:2458:2473 [0] NCCL INFO NCCL_SOCKET_IFNAME set by environment to ens11f1

server3:2458:2473 [0] NCCL INFO NCCL_IB_HCA set to mlx5_1:1

server1:18697:18712 [0] NCCL INFO NCCL_IB_DISABLE set by environment to 0.

server1:18697:18712 [0] NCCL INFO NCCL_SOCKET_IFNAME set by environment to ens11f1

server3:2458:2473 [0] NCCL INFO NET/IB : Using [0]mlx5_1:1/RoCE [RO]; OOB ens11f1:172.16.0.13

server1:18697:18712 [0] NCCL INFO NCCL_IB_HCA set to mlx5_1:1

server3:2458:2473 [0] NCCL INFO Using non-device net plugin version 0

server3:2458:2473 [0] NCCL INFO Using network IB

server1:18697:18712 [0] NCCL INFO NET/IB : Using [0]mlx5_1:1/RoCE [RO]; OOB ens11f1:172.16.0.11

server1:18697:18712 [0] NCCL INFO Using non-device net plugin version 0

server1:18697:18712 [0] NCCL INFO Using network IB

server1:18697:18712 [0] NCCL INFO ncclCommInitRank comm 0x23622c0 rank 0 nranks 3 cudaDev 0 nvmlDev 0 busId 2000 commId 0x35491327c8228dd0 - Init START

server3:2458:2473 [0] NCCL INFO ncclCommInitRank comm 0x346ffc0 rank 2 nranks 3 cudaDev 0 nvmlDev 0 busId 2000 commId 0x35491327c8228dd0 - Init START

server2:20893:20907 [0] NCCL INFO ncclCommInitRank comm 0x2a1af20 rank 1 nranks 3 cudaDev 0 nvmlDev 0 busId 2000 commId 0x35491327c8228dd0 - Init START

server3:2458:2473 [0] NCCL INFO Setting affinity for GPU 0 to 0f,ff000fff

server2:20893:20907 [0] NCCL INFO Setting affinity for GPU 0 to 0f,ff000fff

server1:18697:18712 [0] NCCL INFO Setting affinity for GPU 0 to 0f,ff000fff

server1:18697:18712 [0] NCCL INFO comm 0x23622c0 rank 0 nRanks 3 nNodes 3 localRanks 1 localRank 0 MNNVL 0

server1:18697:18712 [0] NCCL INFO Channel 00/02 : 0 1 2

server1:18697:18712 [0] NCCL INFO Channel 01/02 : 0 1 2

server1:18697:18712 [0] NCCL INFO Trees [0] 2/-1/-1->0->-1 [1] 2/-1/-1->0->1

server1:18697:18712 [0] NCCL INFO P2P Chunksize set to 131072

server3:2458:2473 [0] NCCL INFO comm 0x346ffc0 rank 2 nRanks 3 nNodes 3 localRanks 1 localRank 0 MNNVL 0

server2:20893:20907 [0] NCCL INFO comm 0x2a1af20 rank 1 nRanks 3 nNodes 3 localRanks 1 localRank 0 MNNVL 0

server3:2458:2473 [0] NCCL INFO Trees [0] 1/-1/-1->2->0 [1] -1/-1/-1->2->0

server3:2458:2473 [0] NCCL INFO P2P Chunksize set to 131072

server2:20893:20907 [0] NCCL INFO Trees [0] -1/-1/-1->1->2 [1] 0/-1/-1->1->-1

server2:20893:20907 [0] NCCL INFO P2P Chunksize set to 131072

server3:2458:2473 [0] NCCL INFO Channel 00/0 : 1[0] -> 2[0] [receive] via NET/IB/0

server3:2458:2473 [0] NCCL INFO Channel 01/0 : 1[0] -> 2[0] [receive] via NET/IB/0

server3:2458:2473 [0] NCCL INFO Channel 00/0 : 2[0] -> 0[0] [send] via NET/IB/0

server3:2458:2473 [0] NCCL INFO Channel 01/0 : 2[0] -> 0[0] [send] via NET/IB/0

server2:20893:20907 [0] NCCL INFO Channel 00/0 : 0[0] -> 1[0] [receive] via NET/IB/0

server2:20893:20907 [0] NCCL INFO Channel 01/0 : 0[0] -> 1[0] [receive] via NET/IB/0

server2:20893:20907 [0] NCCL INFO Channel 00/0 : 1[0] -> 2[0] [send] via NET/IB/0

server2:20893:20907 [0] NCCL INFO Channel 01/0 : 1[0] -> 2[0] [send] via NET/IB/0

server1:18697:18712 [0] NCCL INFO Channel 00/0 : 2[0] -> 0[0] [receive] via NET/IB/0

server1:18697:18712 [0] NCCL INFO Channel 01/0 : 2[0] -> 0[0] [receive] via NET/IB/0

server1:18697:18712 [0] NCCL INFO Channel 00/0 : 0[0] -> 1[0] [send] via NET/IB/0

server1:18697:18712 [0] NCCL INFO Channel 01/0 : 0[0] -> 1[0] [send] via NET/IB/0

server3:2458:2475 [0] NCCL INFO NCCL_IB_GID_INDEX set by environment to 3.

server1:18697:18714 [0] NCCL INFO NCCL_IB_GID_INDEX set by environment to 3.

server2:20893:20909 [0] NCCL INFO NCCL_IB_GID_INDEX set by environment to 3.

server1:18697:18712 [0] NCCL INFO Connected all rings

server1:18697:18712 [0] NCCL INFO Channel 01/0 : 1[0] -> 0[0] [receive] via NET/IB/0

server3:2458:2473 [0] NCCL INFO Connected all rings

server2:20893:20907 [0] NCCL INFO Connected all rings

server1:18697:18712 [0] NCCL INFO Channel 00/0 : 0[0] -> 2[0] [send] via NET/IB/0

server2:20893:20907 [0] NCCL INFO Channel 00/0 : 2[0] -> 1[0] [receive] via NET/IB/0

server1:18697:18712 [0] NCCL INFO Channel 01/0 : 0[0] -> 2[0] [send] via NET/IB/0

server3:2458:2473 [0] NCCL INFO Channel 00/0 : 0[0] -> 2[0] [receive] via NET/IB/0

server2:20893:20907 [0] NCCL INFO Channel 01/0 : 1[0] -> 0[0] [send] via NET/IB/0

server3:2458:2473 [0] NCCL INFO Channel 01/0 : 0[0] -> 2[0] [receive] via NET/IB/0

server3:2458:2473 [0] NCCL INFO Channel 00/0 : 2[0] -> 1[0] [send] via NET/IB/0

server3:2458:2473 [0] NCCL INFO Connected all trees

server1:18697:18712 [0] NCCL INFO Connected all trees

server1:18697:18712 [0] NCCL INFO NCCL_ALGO set by environment to ring

server3:2458:2473 [0] NCCL INFO NCCL_ALGO set by environment to ring

server3:2458:2473 [0] NCCL INFO threadThresholds 8/8/64 | 24/8/64 | 512 | 512

server3:2458:2473 [0] NCCL INFO 2 coll channels, 2 collnet channels, 0 nvls channels, 2 p2p channels, 2 p2p channels per peer

server2:20893:20907 [0] NCCL INFO Connected all trees

server2:20893:20907 [0] NCCL INFO NCCL_ALGO set by environment to ring

server2:20893:20907 [0] NCCL INFO threadThresholds 8/8/64 | 24/8/64 | 512 | 512

server2:20893:20907 [0] NCCL INFO 2 coll channels, 2 collnet channels, 0 nvls channels, 2 p2p channels, 2 p2p channels per peer

server1:18697:18712 [0] NCCL INFO threadThresholds 8/8/64 | 24/8/64 | 512 | 512

server1:18697:18712 [0] NCCL INFO 2 coll channels, 2 collnet channels, 0 nvls channels, 2 p2p channels, 2 p2p channels per peer

server2:20893:20907 [0] NCCL INFO TUNER/Plugin: Plugin load returned 11 : libnccl-net.so: cannot open shared object file: No such file or directory : when loading libnccl-tuner.so

server2:20893:20907 [0] NCCL INFO TUNER/Plugin: Using internal tuner plugin.

server2:20893:20907 [0] NCCL INFO ncclCommInitRank comm 0x2a1af20 rank 1 nranks 3 cudaDev 0 nvmlDev 0 busId 2000 commId 0x35491327c8228dd0 - Init COMPLETE

server3:2458:2473 [0] NCCL INFO TUNER/Plugin: Plugin load returned 11 : libnccl-net.so: cannot open shared object file: No such file or directory : when loading libnccl-tuner.so

server3:2458:2473 [0] NCCL INFO TUNER/Plugin: Using internal tuner plugin.

server3:2458:2473 [0] NCCL INFO ncclCommInitRank comm 0x346ffc0 rank 2 nranks 3 cudaDev 0 nvmlDev 0 busId 2000 commId 0x35491327c8228dd0 - Init COMPLETE

server1:18697:18712 [0] NCCL INFO TUNER/Plugin: Plugin load returned 11 : libnccl-net.so: cannot open shared object file: No such file or directory : when loading libnccl-tuner.so

server1:18697:18712 [0] NCCL INFO TUNER/Plugin: Using internal tuner plugin.

server1:18697:18712 [0] NCCL INFO ncclCommInitRank comm 0x23622c0 rank 0 nranks 3 cudaDev 0 nvmlDev 0 busId 2000 commId 0x35491327c8228dd0 - Init COMPLETE

#

# out-of-place in-place

# size count type redop root time algbw busbw #wrong time algbw busbw #wrong

# (B) (elements) (us) (GB/s) (GB/s) (us) (GB/s) (GB/s)

128 32 float sum -1 28.39 0.00 0.01 0 27.35 0.00 0.01 0

256 64 float sum -1 29.44 0.01 0.01 0 28.54 0.01 0.01 0

512 128 float sum -1 29.99 0.02 0.02 0 29.66 0.02 0.02 0

1024 256 float sum -1 32.89 0.03 0.04 0 30.64 0.03 0.04 0

2048 512 float sum -1 34.81 0.06 0.08 0 31.87 0.06 0.09 0

4096 1024 float sum -1 37.32 0.11 0.15 0 36.09 0.11 0.15 0

8192 2048 float sum -1 45.11 0.18 0.24 0 43.12 0.19 0.25 0

16384 4096 float sum -1 57.92 0.28 0.38 0 56.98 0.29 0.38 0

32768 8192 float sum -1 72.68 0.45 0.60 0 70.79 0.46 0.62 0

65536 16384 float sum -1 95.77 0.68 0.91 0 93.73 0.70 0.93 0

131072 32768 float sum -1 162.7 0.81 1.07 0 161.5 0.81 1.08 0

262144 65536 float sum -1 177.3 1.48 1.97 0 177.4 1.48 1.97 0

524288 131072 float sum -1 301.4 1.74 2.32 0 302.0 1.74 2.31 0

1048576 262144 float sum -1 557.9 1.88 2.51 0 559.2 1.88 2.50 0

2097152 524288 float sum -1 1089.8 1.92 2.57 0 1092.2 1.92 2.56 0

4194304 1048576 float sum -1 2165.7 1.94 2.58 0 2166.6 1.94 2.58 0

8388608 2097152 float sum -1 4315.7 1.94 2.59 0 4316.1 1.94 2.59 0

16777216 4194304 float sum -1 8528.8 1.97 2.62 0 8529.3 1.97 2.62 0

33554432 8388608 float sum -1 16622 2.02 2.69 0 16610 2.02 2.69 0

67108864 16777216 float sum -1 32602 2.06 2.74 0 32542 2.06 2.75 0

134217728 33554432 float sum -1 63946 2.10 2.80 0 63831 2.10 2.80 0

268435456 67108864 float sum -1 126529 2.12 2.83 0 126412 2.12 2.83 0

536870912 134217728 float sum -1 251599 2.13 2.85 0 251327 2.14 2.85 0

1073741824 268435456 float sum -1 500664 2.14 2.86 0 501911 2.14 2.85 0

2147483648 536870912 float sum -1 1001415 2.14 2.86 0 1000178 2.15 2.86 0

4294967296 1073741824 float sum -1 1999361 2.15 2.86 0 1997380 2.15 2.87 0

server1:18697:18697 [0] NCCL INFO comm 0x23622c0 rank 0 nranks 3 cudaDev 0 busId 2000 - Destroy COMPLETE

server2:20893:20893 [0] NCCL INFO comm 0x2a1af20 rank 1 nranks 3 cudaDev 0 busId 2000 - Destroy COMPLETE

server3:2458:2458 [0] NCCL INFO comm 0x346ffc0 rank 2 nranks 3 cudaDev 0 busId 2000 - Destroy COMPLETE

# Out of bounds values : 0 OK

# Avg bus bandwidth : 1.66163

#

[root@server1 lichao]#
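Before reading the bandwidth table, it is worth confirming from the NCCL_DEBUG=INFO output that every rank selected the RDMA transport. In the log above each host prints `Using network IB` (with `mlx5_1:1/RoCE` as the device); a run that falls back to TCP prints `Using network Socket` instead. The following is a small sketch for scanning a saved log; the function names are hypothetical.

```python
import re

# Matches lines such as:
#   server2:20893:20907 [0] NCCL INFO Using network IB
_NETWORK_LINE = re.compile(
    r"^(\S+?):\d+:\d+ \[\d+\] NCCL INFO Using network (\S+)", re.MULTILINE
)


def nccl_transports(log_text):
    """Return {hostname: network} taken from 'Using network ...' log lines."""
    return {host: network for host, network in _NETWORK_LINE.findall(log_text)}


def hosts_not_on_ib(transports):
    """Return the hosts whose selected network is not 'IB', sorted by name."""
    return sorted(host for host, network in transports.items() if network != "IB")


# Lines copied from the run above.
log = """server2:20893:20907 [0] NCCL INFO Using non-device net plugin version 0
server2:20893:20907 [0] NCCL INFO Using network IB
server3:2458:2473 [0] NCCL INFO Using network IB
server1:18697:18712 [0] NCCL INFO Using network IB
"""
print(hosts_not_on_ib(nccl_transports(log)))
```

An empty list means all ranks are using the IB/RoCE path.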

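Understanding the results

- size (B): size of the data processed by the operation, in bytes;

- count (elements): number of elements processed by the operation;

- type: data type of the elements;

- redop: reduction operation used;

- root: for rooted operations such as reduce and broadcast, the rank of the root; -1 means the operation has no root (all-reduce involves every rank);

- time (us): execution time of the operation, in microseconds;

- algbw (GB/s): algorithm bandwidth, i.e. data size divided by execution time, in gigabytes per second;

- busbw (GB/s): bus bandwidth, i.e. algbw scaled by a factor that depends on the collective so that it can be compared with the hardware link speed, in gigabytes per second;

- #wrong: number of incorrect results; any non-zero value means the validation found errors.

In this run, both algorithm bandwidth and bus bandwidth rise with message size and level off at about 2.15 GB/s and 2.86 GB/s respectively once messages reach roughly 1 GiB. Small messages are dominated by per-operation latency, so only the large-message rows show what the link can sustain.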
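The two bandwidth columns can be reproduced from the size and time columns. For all-reduce, nccl-tests defines algbw as size divided by time and busbw as algbw multiplied by 2(n-1)/n, where n is the number of ranks. The sketch below applies these formulas to the last row of the table; the function name is hypothetical.

```python
def allreduce_bandwidth(size_bytes, time_us, n_ranks):
    """Return (algbw, busbw) in GB/s for an all-reduce (1 GB = 1e9 bytes)."""
    algbw = size_bytes / (time_us * 1e-6) / 1e9
    # All-reduce correction factor used by nccl-tests: 2 * (n - 1) / n.
    busbw = algbw * 2 * (n_ranks - 1) / n_ranks
    return algbw, busbw


# Last out-of-place row above: 4294967296 bytes in 1999361 us on 3 ranks.
algbw, busbw = allreduce_bandwidth(4294967296, 1999361, 3)
print(f"algbw={algbw:.2f} GB/s busbw={busbw:.2f} GB/s")  # 2.15 and 2.86
```

With three ranks the factor is 4/3, which is why busbw is consistently about one third higher than algbw in the table.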

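When reviewing the results, pay attention to the following:

1. Whether bandwidth drops as the data size increases (a clear drop is not expected);

2. Focus on the peak bandwidth; for each run it is enough to look at either the in-place or the out-of-place columns;

3. The reported average is taken across all message sizes, so in a sweep with increasing sizes it may not reflect the final result (here the average is 1.66 GB/s against a peak of about 2.86 GB/s);

4. Make sure the data size is large enough to reach the bandwidth ceiling (adjust the -b, -e, or -n options).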
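The first two checks in the list above are easy to automate on saved nccl-tests output. The following sketch parses the result rows, reports the peak bus bandwidth, and lists any size where the out-of-place bus bandwidth falls noticeably below the best value seen so far. The function names and the 5% tolerance are assumptions chosen for illustration.

```python
def parse_rows(output):
    """Return [(size_bytes, out_of_place_busbw, in_place_busbw)] from result rows."""
    rows = []
    for line in output.splitlines():
        fields = line.split()
        # A result row has 13 columns and starts with the size in bytes.
        if len(fields) == 13 and fields[0].isdigit():
            rows.append((int(fields[0]), float(fields[7]), float(fields[11])))
    return rows


def peak_busbw(rows):
    """Highest bus bandwidth over both the out-of-place and in-place columns."""
    return max(max(out, inp) for _, out, inp in rows)


def bandwidth_drops(rows, tolerance=0.05):
    """Sizes whose out-of-place busbw is more than `tolerance` below the running peak."""
    drops, best = [], 0.0
    for size, out, _ in rows:
        if out < best * (1 - tolerance):
            drops.append(size)
        best = max(best, out)
    return drops


# Three rows copied from the table above.
sample = """
1073741824 268435456 float sum -1 500664 2.14 2.86 0 501911 2.14 2.85 0
2147483648 536870912 float sum -1 1001415 2.14 2.86 0 1000178 2.15 2.86 0
4294967296 1073741824 float sum -1 1999361 2.15 2.86 0 1997380 2.15 2.87 0
"""
rows = parse_rows(sample)
print(peak_busbw(rows), bandwidth_drops(rows))
```

Log lines and `#` comment lines are ignored because they do not have 13 whitespace-separated columns starting with a number.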

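Common parameters

- Number of GPUs

  - -t,--nthreads: number of threads per process, default 1;

  - -g,--ngpus: number of GPUs per thread, default 1;

- Data size

  - -b,--minbytes: minimum size to start with, default 32M;

  - -e,--maxbytes: maximum size to end at, default 32M;

- Size increments

  - -i,--stepbytes: fixed increment between sizes, default 1M;

  - -f,--stepfactor: multiplication factor between sizes, disabled by default;

- NCCL operation

  - -o,--op: reduction operation to perform; only relevant for reduction collectives such as AllReduce, Reduce, or ReduceScatter, default Sum;

  - -d,--datatype: data type to use, default Float;

- Performance

  - -n,--iters: number of iterations for each size, default 20;

  - -w,--warmup_iters: number of warm-up iterations (not timed), default 5;

  - -m,--agg_iters: number of operations to aggregate in each iteration, default 1;

  - -a,--average <0/1/2/3>: how to report performance across ranks (MPI=1 only): 0=Rank0, 1=Avg, 2=Min, 3=Max, default 1;

- Test behavior

  - -p,--parallel_init <0/1>: use threads to initialize NCCL in parallel, default 0;

  - -c,--check <0/1>: check the correctness of results; can be very slow on large numbers of GPUs, default 1;

  - -z,--blocking <0/1>: make NCCL collectives blocking, i.e. have the CPU wait and synchronize after each collective, default 0;

  - -G,--cudagraph: capture iterations as a CUDA graph and replay it the specified number of times, default 0.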
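To see how -b, -e, -i, and -f interact, the sketch below lists the message sizes a sweep would visit. It assumes that sizes advance by multiplication when a step factor greater than 1 is given and by a fixed increment otherwise, which matches the rows printed in the run above; the function name is hypothetical.

```python
def sweep_sizes(min_bytes, max_bytes, step_factor=None, step_bytes=1 << 20):
    """List the message sizes visited between min_bytes and max_bytes (inclusive)."""
    sizes = []
    size = min_bytes
    while size <= max_bytes:
        sizes.append(size)
        if step_factor and step_factor > 1:
            size *= step_factor      # -f: multiply by the factor
        else:
            size += step_bytes       # -i: add a fixed increment
    return sizes


# The run above used -b 128 -f 2, and maxBytes was reduced to 5261099008.
sizes = sweep_sizes(128, 5261099008, step_factor=2)
print(len(sizes), sizes[0], sizes[-1])

# With the defaults (-b 32M -e 32M) only a single size is tested.
print(sweep_sizes(32 << 20, 32 << 20))
```

The first call yields 26 sizes from 128 to 4294967296 bytes, the same rows that appear in the result table.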

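Experimental Testing

With the hardware and software selected and configured, the next step is hands-on testing.

Get the LLaMA-Factory source package

Because of network issues, pulling the repository directly with git clone is unreliable; download the archive elsewhere and upload it to the server instead: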

noone@MacBook-Air Downloads % scp LLaMA-Factory-0.8.3.zip root@10.230.1.13:/tmp

[root@server3 AIGC]# pwd

/home/lichao/AIGC

[root@server3 AIGC]# cp /tmp/LLaMA-Factory-0.8.3.zip ./

[root@server3 AIGC]# unzip LLaMA-Factory-0.8.3.zip

[root@server3 AIGC]# cd LLaMA-Factory-0.8.3

[root@server3 LLaMA-Factory-0.8.3]# ll

總用量 128

drwxr-xr-x 2 root root 83 9月 13 05:04 assets

drwxr-xr-x 2 root root 122 9月 6 08:26 cache

-rw-r--r-- 1 root root 1378 7月 18 19:36 CITATION.cff

drwxr-xr-x 6 root root 4096 9月 13 05:03 data

drwxr-xr-x 4 root root 43 7月 18 19:36 docker

drwxr-xr-x 5 root root 44 7月 18 19:36 evaluation

drwxr-xr-x 10 root root 182 7月 18 19:36 examples

-rw-r--r-- 1 root root 11324 7月 18 19:36 LICENSE

-rw-r--r-- 1 root root 242 7月 18 19:36 Makefile

-rw-r--r-- 1 root root 33 7月 18 19:36 MANIFEST.in

-rw-r--r-- 1 root root 645 7月 18 19:36 pyproject.toml

-rw-r--r-- 1 root root 44424 7月 18 19:36 README.md

-rw-r--r-- 1 root root 44093 7月 18 19:36 README_zh.md

-rw-r--r-- 1 root root 245 7月 18 19:36 requirements.txt

drwxr-xr-x 3 root root 16 9月 6 18:48 saves

drwxr-xr-x 2 root root 219 7月 18 19:36 scripts

-rw-r--r-- 1 root root 3361 7月 18 19:36 setup.py

drwxr-xr-x 4 root root 101 9月 6 08:22 src

drwxr-xr-x 5 root root 43 7月 18 19:36 tests

[root@server3 LLaMA-Factory-0.8.3]#

Install LLaMA-Factory and verify the installation

[root@server3 LLaMA-Factory-0.8.3]# pip install -e ".[torch,metrics]"

[root@server3 LLaMA-Factory-0.8.3]# llamafactory-cli version

[2024-09-23 08:51:28,722] [INFO] [real_accelerator.py:203:get_accelerator] Setting ds_accelerator to cuda (auto detect)

----------------------------------------------------------

| Welcome to LLaMA Factory, version 0.8.3 |

| |

| Project page: https://github.com/hiyouga/LLaMA-Factory |

----------------------------------------------------------

[root@server3 LLaMA-Factory-0.8.3]#

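Download the pretrained model and datasets needed for training

Choose a training method, model, and datasets that fit the GPU installed in the servers.

GPU model: NVIDIA GeForce RTX 4060 Ti 16GB

Pretrained model: Qwen/Qwen1.5-0.5B-Chat

Datasets: identity, alpaca_zh_demo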

# Make sure you have git-lfs installed (https://git-lfs.com)

git lfs install

git clone https://hf-mirror.com/Qwen/Qwen1.5-0.5B-Chat

# If you want to clone without large files - just their pointers

GIT_LFS_SKIP_SMUDGE=1 git clone https://hf-mirror.com/Qwen/Qwen1.5-0.5B-Chat

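Because direct downloads are unreliable on this network, the pretrained model is pulled from hf-mirror.com, a Hugging Face mirror site in China. Setting GIT_LFS_SKIP_SMUDGE=1 makes the clone fetch only small pointer files in place of the large files, which are then downloaded manually and uploaded to the server.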
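After a clone with GIT_LFS_SKIP_SMUDGE=1, each large file is a short text pointer whose first line begins with `version https://git-lfs.github.com/spec/v1`. The sketch below lists the files in a cloned directory that are still pointers, that is, the ones that must be downloaded and uploaded by hand. The function name is hypothetical.

```python
from pathlib import Path

# First bytes of every Git LFS pointer file.
LFS_HEADER = b"version https://git-lfs.github.com/spec/v1"


def lfs_pointer_files(repo_dir):
    """Return paths (relative to repo_dir) that are still Git LFS pointer files."""
    root = Path(repo_dir)
    pointers = []
    for path in sorted(root.rglob("*")):
        relative = path.relative_to(root)
        # Skip Git's own metadata and anything that is not a regular file.
        if ".git" in relative.parts or not path.is_file():
            continue
        with path.open("rb") as handle:
            if handle.read(len(LFS_HEADER)) == LFS_HEADER:
                pointers.append(relative)
    return pointers
```

For example, `lfs_pointer_files("Qwen1.5-0.5B-Chat")` returns an empty list once every large file has been replaced with its real content.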

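Alternatively, install the Hugging Face command-line tool and use it to download the pretrained model and datasets. After installation, set an environment variable that points the tool at the mirror site hf-mirror.com to work around the network issue.

1. Install the dependency

[root@server3 LLaMA-Factory-0.8.3]# pip3 install -U huggingface_hub

2. Set the environment variable

[root@server3 LLaMA-Factory-0.8.3]# export HF_ENDPOINT=https://hf-mirror.com

Add this line to ~/.bashrc to make it permanent.

3. Confirm that the environment variable has taken effect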

[root@server3 LLaMA-Factory-0.8.3]# huggingface-cli env

Copy-and-paste the text below in your GitHub issue.

- huggingface_hub version: 0.24.5

- Platform: Linux-3.10.0-1160.118.1.el7.x86_64-x86_64-with-glibc2.17

- Python version: 3.11.9

- Running in iPython ?: No

- Running in notebook ?: No

- Running in Google Colab ?: No

- Token path ?: /root/.cache/huggingface/token

- Has saved token ?: True

- Who am I ?: richard-open-source

- Configured git credential helpers:

- FastAI: N/A

- Tensorflow: N/A

- Torch: 2.4.0

- Jinja2: 3.1.4

- Graphviz: N/A

- keras: N/A

- Pydot: N/A

- Pillow: 10.4.0

- hf_transfer: N/A

- gradio: 4.43.0

- tensorboard: N/A

- numpy: 1.26.4

- pydantic: 2.9.0

- aiohttp: 3.10.3

- ENDPOINT: https://hf-mirror.com

- HF_HUB_CACHE: /root/.cache/huggingface/hub

- HF_ASSETS_CACHE: /root/.cache/huggingface/assets

- HF_TOKEN_PATH: /root/.cache/huggingface/token

- HF_HUB_OFFLINE: False

- HF_HUB_DISABLE_TELEMETRY: False

- HF_HUB_DISABLE_PROGRESS_BARS: None

- HF_HUB_DISABLE_SYMLINKS_WARNING: False

- HF_HUB_DISABLE_EXPERIMENTAL_WARNING: False

- HF_HUB_DISABLE_IMPLICIT_TOKEN: False

- HF_HUB_ENABLE_HF_TRANSFER: False

- HF_HUB_ETAG_TIMEOUT: 10

- HF_HUB_DOWNLOAD_TIMEOUT: 10

[root@server3 LLaMA-Factory-0.8.3]#

4.1 Download the model

[root@server3 LLaMA-Factory-0.8.3]# huggingface-cli download --resume-download Qwen/Qwen1.5-0.5B-Chat --local-dir ./models/Qwen1.5-0.5B-Chat

4.2 Download the dataset

[root@server3 LLaMA-Factory-0.8.3]# huggingface-cli download --repo-type dataset --resume-download alpaca_zh_demo --local-dir ./datasets/alpaca_zh_demo
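The same download can be scripted with the huggingface_hub Python package installed in step 1. This is a minimal sketch under stated assumptions: the helper names are hypothetical, and the mirror is selected by setting HF_ENDPOINT before the library is imported, because the library reads that variable at import time.

```python
import os

MIRROR = "https://hf-mirror.com"  # mirror endpoint used in this article


def download_kwargs(repo_id, local_dir, repo_type="model"):
    """Keyword arguments passed to huggingface_hub.snapshot_download."""
    return {"repo_id": repo_id, "repo_type": repo_type, "local_dir": local_dir}


def download_from_mirror(repo_id, local_dir, repo_type="model", endpoint=MIRROR):
    """Download a whole repository through the mirror and return the local path."""
    # Must be set before huggingface_hub is imported for the first time.
    os.environ["HF_ENDPOINT"] = endpoint
    from huggingface_hub import snapshot_download

    return snapshot_download(**download_kwargs(repo_id, local_dir, repo_type))
```

Calling `download_from_mirror("Qwen/Qwen1.5-0.5B-Chat", "./models/Qwen1.5-0.5B-Chat")` corresponds to the model download command in step 4.1.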

Download the pretrained model

[root@server3 AIGC]# mkdir models

[root@server3 AIGC]# cd models/

[root@server3 models]# GIT_LFS_SKIP_SMUDGE=1 git clone https://hf-mirror.com/Qwen/Qwen1.5-0.5B-Chat

[root@server3 models]# tree -h Qwen1.5-0.5B-Chat/

Qwen1.5-0.5B-Chat/

├── [ 656] config.json

├── [ 661] config.json.raw

├── [ 206] generation_config.json

├── [7.1K] LICENSE

├── [1.6M] merges.txt

├── [1.2G] model.safetensors

├── [4.2K] README.md

├── [1.3K] tokenizer_config.json

├── [6.7M] tokenizer.json

└── [2.6M] vocab.json

0 directories, 10 files

[root@server3 models]#

Download the datasets

The data directory of the LLaMA-Factory project ships with several local datasets that can be used directly.

[root@server3 LLaMA-Factory-0.8.3]# tree -h data/

data/

├── [841K] alpaca_en_demo.json

├── [621K] alpaca_zh_demo.json

├── [ 32] belle_multiturn

│ └── [2.7K] belle_multiturn.py

├── [733K] c4_demo.json

├── [ 13K] dataset_info.json

├── [1.5M] dpo_en_demo.json

├── [833K] dpo_zh_demo.json

├── [722K] glaive_toolcall_en_demo.json

├── [665K] glaive_toolcall_zh_demo.json

├── [ 27] hh_rlhf_en

│ └── [3.3K] hh_rlhf_en.py

├── [ 20K] identity.json

├── [892K] kto_en_demo.json

├── [ 45] mllm_demo_data

│ ├── [ 12K] 1.jpg

│ ├── [ 22K] 2.jpg

│ └── [ 16K] 3.jpg

├── [3.1K] mllm_demo.json

├── [9.8K] README.md

├── [9.2K] README_zh.md

├── [ 27] ultra_chat

│ └── [2.3K] ultra_chat.py

└── [1004K] wiki_demo.txt

4 directories, 20 files

[root@server3 LLaMA-Factory-0.8.3]#

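Run a single-node training test with the prepared model and datasets

LLaMA-Factory supports fine-tuning large language models through a WebUI. After installation, use the WebUI for a quick single-node sanity check; once it works, switch to the command-line tool for multi-node distributed training.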

[root@server3 LLaMA-Factory-0.8.3]# llamafactory-cli webui

[2024-09-23 17:54:45,786] [INFO] [real_accelerator.py:203:get_accelerator] Setting ds_accelerator to cuda (auto detect)

Running on local URL: http://0.0.0.0:7861

To create a public link, set `share=True` in `launch()`.

Run the multi-node distributed training job from the command line

1. Prepare the directories

[root@server3 LLaMA-Factory-0.8.3]# mkdir asterun

[root@server3 LLaMA-Factory-0.8.3]# mkdir -p asterun/saves/qwen/full/sft

2. Prepare the distributed training configuration file for the cluster environment and the training task

[root@server3 LLaMA-Factory-0.8.3]# cat asterun/qwen_full_sft_ds2.yaml

### model

model_name_or_path: /home/lichao/AIGC/models/Qwen1.5-0.5B-Chat

### method

stage: sft

do_train: true

finetuning_type: full

deepspeed: examples/deepspeed/ds_z2_config.json

### dataset

dataset: identity,alpaca_zh_demo

template: qwen

cutoff_len: 1024

max_samples: 1000

overwrite_cache: true

preprocessing_num_workers: 16

### output

output_dir: asterun/saves/qwen/full/sft

logging_steps: 10

save_steps: 500

plot_loss: true

overwrite_output_dir: true

report_to: tensorboard

logging_dir: asterun/saves/qwen/full/sft/runs

### train

per_device_train_batch_size: 1

gradient_accumulation_steps: 2

learning_rate: 1.0e-4

num_train_epochs: 3.0

lr_scheduler_type: cosine

warmup_ratio: 0.1

bf16: true

ddp_timeout: 180000000

### eval

val_size: 0.1

per_device_eval_batch_size: 1

eval_strategy: steps

eval_steps: 500

[root@server3 LLaMA-Factory-0.8.3]#
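With data-parallel training, the number of samples consumed per optimizer step is the product of the per-device batch size, the gradient accumulation steps, and the total number of GPUs. The sketch below works this out for the configuration above on the three single-GPU nodes used in this article; the function name is hypothetical.

```python
def effective_batch_size(per_device_batch, grad_accum_steps, nnodes, gpus_per_node):
    """Samples consumed per optimizer step across the whole data-parallel job."""
    return per_device_batch * grad_accum_steps * nnodes * gpus_per_node


# per_device_train_batch_size: 1, gradient_accumulation_steps: 2,
# three nodes with one RTX 4060 Ti each.
print(effective_batch_size(1, 2, nnodes=3, gpus_per_node=1))  # 6
```

Keeping this number in mind helps when changing the node count: adding or removing nodes changes the effective batch size unless gradient_accumulation_steps is adjusted to compensate.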

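3. Prepare the runtime environment on Server1 and Server2 in the same way

Steps omitted.

4. Start the distributed training job on each of the three GPU nodes in turn

Master node (rank 0):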

[root@server3 LLaMA-Factory-0.8.3]# FORCE_TORCHRUN=1 NNODES=3 RANK=0 MASTER_ADDR=172.16.0.13 MASTER_PORT=29500 llamafactory-cli train asterun/qwen_full_sft_ds2.yaml

Worker node (rank 1):

[root@server2 LLaMA-Factory-0.8.3]# FORCE_TORCHRUN=1 NNODES=3 RANK=1 MASTER_ADDR=172.16.0.13 MASTER_PORT=29500 llamafactory-cli train asterun/qwen_full_sft_ds2.yaml

Worker node (rank 2):

[root@server1 LLaMA-Factory-0.8.3]# FORCE_TORCHRUN=1 NNODES=3 RANK=2 MASTER_ADDR=172.16.0.13 MASTER_PORT=29500 llamafactory-cli train asterun/qwen_full_sft_ds2.yaml
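The three launch commands differ only in RANK, so they can be generated from the host list to avoid copy-and-paste mistakes. The following is a small sketch; the function name is hypothetical, and it only builds the command strings shown above without executing anything.

```python
def launch_commands(hosts, master_addr, master_port, config_path):
    """Return {host: command}; hosts are listed in rank order, rank 0 first."""
    commands = {}
    for rank, host in enumerate(hosts):
        commands[host] = (
            f"FORCE_TORCHRUN=1 NNODES={len(hosts)} RANK={rank} "
            f"MASTER_ADDR={master_addr} MASTER_PORT={master_port} "
            f"llamafactory-cli train {config_path}"
        )
    return commands


# Same rank order as above: server3 is the master, then server2, then server1.
cmds = launch_commands(
    ["server3", "server2", "server1"],
    master_addr="172.16.0.13",
    master_port=29500,
    config_path="asterun/qwen_full_sft_ds2.yaml",
)
for host, command in cmds.items():
    print(f"{host}: {command}")
```

Each command must be run from the LLaMA-Factory-0.8.3 directory on its own host, and MASTER_ADDR must be reachable from every node.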

Inference Test

Install the GGUF library

Download the llama.cpp source package to the server and unpack it into the working directory

[root@server3 AIGC]# unzip llama.cpp-master.zip

[root@server3 AIGC]# cd llama.cpp-master

[root@server3 llama.cpp-master]# ll

總用量 576

-rw-r--r-- 1 root root 33717 9月 26 11:38 AUTHORS

drwxr-xr-x 2 root root 37 9月 26 11:38 ci

drwxr-xr-x 2 root root 164 9月 26 11:38 cmake

-rw-r--r-- 1 root root 6591 9月 26 11:38 CMakeLists.txt

-rw-r--r-- 1 root root 3164 9月 26 11:38 CMakePresets.json

drwxr-xr-x 3 root root 4096 9月 26 11:38 common

-rw-r--r-- 1 root root 2256 9月 26 11:38 CONTRIBUTING.md

-rwxr-xr-x 1 root root 199470 9月 26 11:38 convert_hf_to_gguf.py

-rwxr-xr-x 1 root root 15993 9月 26 11:38 convert_hf_to_gguf_update.py

-rwxr-xr-x 1 root root 19106 9月 26 11:38 convert_llama_ggml_to_gguf.py

-rwxr-xr-x 1 root root 14901 9月 26 11:38 convert_lora_to_gguf.py

drwxr-xr-x 4 root root 109 9月 26 11:38 docs

drwxr-xr-x 43 root root 4096 9月 26 11:38 examples

-rw-r--r-- 1 root root 1556 9月 26 11:38 flake.lock

-rw-r--r-- 1 root root 7469 9月 26 11:38 flake.nix

drwxr-xr-x 5 root root 85 9月 26 11:38 ggml

drwxr-xr-x 6 root root 116 9月 26 11:38 gguf-py

drwxr-xr-x 2 root root 154 9月 26 11:38 grammars

drwxr-xr-x 2 root root 21 9月 26 11:38 include

-rw-r--r-- 1 root root 1078 9月 26 11:38 LICENSE

-rw-r--r-- 1 root root 50865 9月 26 11:38 Makefile

drwxr-xr-x 2 root root 163 9月 26 11:38 media

drwxr-xr-x 2 root root 4096 9月 26 11:38 models

-rw-r--r-- 1 root root 163 9月 26 11:38 mypy.ini

-rw-r--r-- 1 root root 2044 9月 26 11:38 Package.swift

drwxr-xr-x 3 root root 40 9月 26 11:38 pocs

-rw-r--r-- 1 root root 124786 9月 26 11:38 poetry.lock

drwxr-xr-x 2 root root 4096 9月 26 11:38 prompts

-rw-r--r-- 1 root root 1280 9月 26 11:38 pyproject.toml

-rw-r--r-- 1 root root 528 9月 26 11:38 pyrightconfig.json

-rw-r--r-- 1 root root 28481 9月 26 11:38 README.md

drwxr-xr-x 2 root root 4096 9月 26 11:38 requirements

-rw-r--r-- 1 root root 505 9月 26 11:38 requirements.txt

drwxr-xr-x 2 root root 4096 9月 26 11:38 scripts

-rw-r--r-- 1 root root 5090 9月 26 11:38 SECURITY.md

drwxr-xr-x 2 root root 97 9月 26 11:38 spm-headers

drwxr-xr-x 2 root root 289 9月 26 11:38 src

drwxr-xr-x 2 root root 4096 9月 26 11:38 tests

[root@server3 llama.cpp-master]#

Enter the gguf-py subdirectory and install the GGUF library

[root@server3 llama.cpp-master]# cd gguf-py

[root@server3 gguf-py]# ll

總用量 12

drwxr-xr-x 2 root root 40 9月 26 11:38 examples

drwxr-xr-x 2 root root 230 9月 26 11:38 gguf

-rw-r--r-- 1 root root 1072 9月 26 11:38 LICENSE

-rw-r--r-- 1 root root 1049 9月 26 11:38 pyproject.toml

-rw-r--r-- 1 root root 2719 9月 26 11:38 README.md

drwxr-xr-x 2 root root 151 9月 26 11:38 scripts

drwxr-xr-x 2 root root 71 9月 26 11:38 tests

[root@server3 gguf-py]# pip install --editable .

Looking in indexes: https://mirrors.aliyun.com/pypi/simple/

Obtaining file:///home/lichao/AIGC/llama.cpp-master/gguf-py

Installing build dependencies ... done

Checking if build backend supports build_editable ... done

Getting requirements to build editable ... done

Preparing editable metadata (pyproject.toml) ... done

Requirement already satisfied: numpy>=1.17 in /home/lichao/opt/python3.11.9/lib/python3.11/site-packages (from gguf==0.10.0) (1.26.4)

Requirement already satisfied: pyyaml>=5.1 in /home/lichao/opt/python3.11.9/lib/python3.11/site-packages (from gguf==0.10.0) (6.0.2)

Requirement already satisfied: sentencepiece<=0.2.0,>=0.1.98 in /home/lichao/opt/python3.11.9/lib/python3.11/site-packages (from gguf==0.10.0) (0.2.0)

Requirement already satisfied: tqdm>=4.27 in /home/lichao/opt/python3.11.9/lib/python3.11/site-packages (from gguf==0.10.0) (4.66.5)

Building wheels for collected packages: gguf

Building editable for gguf (pyproject.toml) ... done

Created wheel for gguf: filename=gguf-0.10.0-py3-none-any.whl size=3403 sha256=4a0851426e263076c64c9854be9dfe95493844062484d001fddb08c1be5fa2ca

Stored in directory: /tmp/pip-ephem-wheel-cache-iiq8ofh3/wheels/80/80/9b/c6c23d750f4bd20fc0c2c75e51253d89c61a2369247fb694db

Successfully built gguf

Installing collected packages: gguf

Successfully installed gguf-0.10.0

WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager, possibly rendering your system unusable.It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv. Use the --root-user-action option if you know what you are doing and want to suppress this warning.

[root@server3 gguf-py]#

Model format conversion

Convert the safetensors model produced by the earlier fine-tuning run to GGUF format

[root@server3 gguf-py]# cd ..

[root@server3 llama.cpp-master]# python3 convert_hf_to_gguf.py /home/lichao/AIGC/LLaMA-Factory-0.8.3/asterun/saves/qwen/full/sft

INFO:hf-to-gguf:Loading model: sft

INFO:gguf.gguf_writer:gguf: This GGUF file is for Little Endian only

INFO:hf-to-gguf:Exporting model...

INFO:hf-to-gguf:gguf: loading model part 'model.safetensors'

INFO:hf-to-gguf:output.weight, torch.bfloat16 --> F16, shape = {1024, 151936}

INFO:hf-to-gguf:token_embd.weight, torch.bfloat16 --> F16, shape = {1024, 151936}

INFO:hf-to-gguf:blk.0.attn_norm.weight, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.0.ffn_down.weight, torch.bfloat16 --> F16, shape = {2816, 1024}

INFO:hf-to-gguf:blk.0.ffn_gate.weight, torch.bfloat16 --> F16, shape = {1024, 2816}

INFO:hf-to-gguf:blk.0.ffn_up.weight, torch.bfloat16 --> F16, shape = {1024, 2816}

INFO:hf-to-gguf:blk.0.ffn_norm.weight, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.0.attn_k.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.0.attn_k.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.0.attn_output.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.0.attn_q.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.0.attn_q.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.0.attn_v.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.0.attn_v.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.1.attn_norm.weight, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.1.ffn_down.weight, torch.bfloat16 --> F16, shape = {2816, 1024}

INFO:hf-to-gguf:blk.1.ffn_gate.weight, torch.bfloat16 --> F16, shape = {1024, 2816}

INFO:hf-to-gguf:blk.1.ffn_up.weight, torch.bfloat16 --> F16, shape = {1024, 2816}

INFO:hf-to-gguf:blk.1.ffn_norm.weight, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.1.attn_k.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.1.attn_k.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.1.attn_output.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.1.attn_q.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.1.attn_q.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.1.attn_v.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.1.attn_v.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.10.attn_norm.weight, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.10.ffn_down.weight, torch.bfloat16 --> F16, shape = {2816, 1024}

INFO:hf-to-gguf:blk.10.ffn_gate.weight, torch.bfloat16 --> F16, shape = {1024, 2816}

INFO:hf-to-gguf:blk.10.ffn_up.weight, torch.bfloat16 --> F16, shape = {1024, 2816}

INFO:hf-to-gguf:blk.10.ffn_norm.weight, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.10.attn_k.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.10.attn_k.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.10.attn_output.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.10.attn_q.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.10.attn_q.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.10.attn_v.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.10.attn_v.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.11.attn_norm.weight, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.11.ffn_down.weight, torch.bfloat16 --> F16, shape = {2816, 1024}

INFO:hf-to-gguf:blk.11.ffn_gate.weight, torch.bfloat16 --> F16, shape = {1024, 2816}

INFO:hf-to-gguf:blk.11.ffn_up.weight, torch.bfloat16 --> F16, shape = {1024, 2816}

INFO:hf-to-gguf:blk.11.ffn_norm.weight, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.11.attn_k.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.11.attn_k.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.11.attn_output.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.11.attn_q.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.11.attn_q.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.11.attn_v.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.11.attn_v.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.12.attn_norm.weight, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.12.ffn_down.weight, torch.bfloat16 --> F16, shape = {2816, 1024}

INFO:hf-to-gguf:blk.12.ffn_gate.weight, torch.bfloat16 --> F16, shape = {1024, 2816}

INFO:hf-to-gguf:blk.12.ffn_up.weight, torch.bfloat16 --> F16, shape = {1024, 2816}

INFO:hf-to-gguf:blk.12.ffn_norm.weight, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.12.attn_k.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.12.attn_k.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.12.attn_output.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.12.attn_q.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.12.attn_q.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.12.attn_v.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.12.attn_v.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.13.attn_norm.weight, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.13.ffn_down.weight, torch.bfloat16 --> F16, shape = {2816, 1024}

INFO:hf-to-gguf:blk.13.ffn_gate.weight, torch.bfloat16 --> F16, shape = {1024, 2816}

INFO:hf-to-gguf:blk.13.ffn_up.weight, torch.bfloat16 --> F16, shape = {1024, 2816}

INFO:hf-to-gguf:blk.13.ffn_norm.weight, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.13.attn_k.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.13.attn_k.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.13.attn_output.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.13.attn_q.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.13.attn_q.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.13.attn_v.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.13.attn_v.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.14.attn_norm.weight, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.14.ffn_down.weight, torch.bfloat16 --> F16, shape = {2816, 1024}

INFO:hf-to-gguf:blk.14.ffn_gate.weight, torch.bfloat16 --> F16, shape = {1024, 2816}

INFO:hf-to-gguf:blk.14.ffn_up.weight, torch.bfloat16 --> F16, shape = {1024, 2816}

INFO:hf-to-gguf:blk.14.ffn_norm.weight, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.14.attn_k.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.14.attn_k.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.14.attn_output.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.14.attn_q.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.14.attn_q.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.14.attn_v.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.14.attn_v.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.15.attn_norm.weight, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.15.ffn_down.weight, torch.bfloat16 --> F16, shape = {2816, 1024}

INFO:hf-to-gguf:blk.15.ffn_gate.weight, torch.bfloat16 --> F16, shape = {1024, 2816}

INFO:hf-to-gguf:blk.15.ffn_up.weight, torch.bfloat16 --> F16, shape = {1024, 2816}

INFO:hf-to-gguf:blk.15.ffn_norm.weight, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.15.attn_k.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.15.attn_k.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.15.attn_output.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.15.attn_q.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.15.attn_q.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.15.attn_v.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.15.attn_v.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.16.attn_norm.weight, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.16.ffn_down.weight, torch.bfloat16 --> F16, shape = {2816, 1024}

INFO:hf-to-gguf:blk.16.ffn_gate.weight, torch.bfloat16 --> F16, shape = {1024, 2816}

INFO:hf-to-gguf:blk.16.ffn_up.weight, torch.bfloat16 --> F16, shape = {1024, 2816}

INFO:hf-to-gguf:blk.16.ffn_norm.weight, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.16.attn_k.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.16.attn_k.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.16.attn_output.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.16.attn_q.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.16.attn_q.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.16.attn_v.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.16.attn_v.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.17.attn_norm.weight, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.17.ffn_down.weight, torch.bfloat16 --> F16, shape = {2816, 1024}

INFO:hf-to-gguf:blk.17.ffn_gate.weight, torch.bfloat16 --> F16, shape = {1024, 2816}

INFO:hf-to-gguf:blk.17.ffn_up.weight, torch.bfloat16 --> F16, shape = {1024, 2816}

INFO:hf-to-gguf:blk.17.ffn_norm.weight, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.17.attn_k.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.17.attn_k.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.17.attn_output.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.17.attn_q.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.17.attn_q.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.17.attn_v.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.17.attn_v.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.18.attn_norm.weight, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.18.ffn_down.weight, torch.bfloat16 --> F16, shape = {2816, 1024}

INFO:hf-to-gguf:blk.18.ffn_gate.weight, torch.bfloat16 --> F16, shape = {1024, 2816}

INFO:hf-to-gguf:blk.18.ffn_up.weight, torch.bfloat16 --> F16, shape = {1024, 2816}

INFO:hf-to-gguf:blk.18.ffn_norm.weight, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.18.attn_k.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.18.attn_k.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.18.attn_output.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.18.attn_q.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.18.attn_q.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.18.attn_v.bias, torch.bfloat16 --> F32, shape = {1024}

INFO:hf-to-gguf:blk.18.attn_v.weight, torch.bfloat16 --> F16, shape = {1024, 1024}

INFO:hf-to-gguf:blk.19.attn_norm.weight, torch.bfloat16 --> F32, shape = {1024}