大佬們好,分享一下我用魯班貓做ros主控,stm32f407做底層驅(qū)動的一個(gè)ros小車。

目的是識別煙霧并凈化:凈化是用的負(fù)離子發(fā)生器(效果如文章頂部視頻,凈化還是很頂?shù)模饧由热~將其擴(kuò)散出去。同時(shí)也具有環(huán)境氣體濃度(質(zhì)量)檢測的功能。

b站鏈接:

https://www.bilibili.com/video/BV1hh4y1n7Fz/?vd_source=4fa660ff7e4423139e6ebdbd4dece6c7

這是我去年12月底開始做的,入坑魯班貓算是比較早了。在讀大三學(xué)生。正奧里給考研中。。。

最底下還塞了一塊vet6和一塊esp32.

板子上加了個(gè)風(fēng)扇,為了散熱快。

魯班貓1s做ROS主控用于ros建圖(gmapping)和導(dǎo)航,同時(shí)接入NPU做抽煙監(jiān)測,模型是yolov5自己訓(xùn)練的模型轉(zhuǎn)化成rknn部署在板子上。

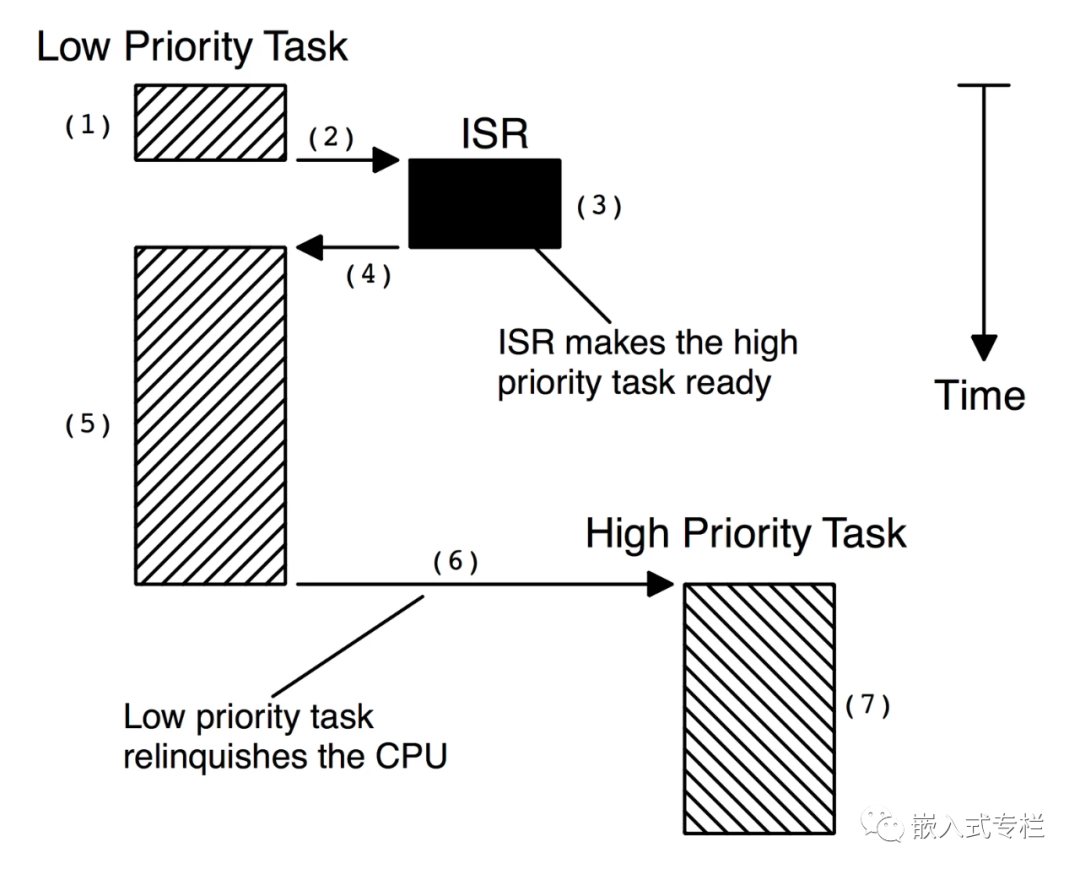

功能部分即功能層的stm32與串口屏、esp32通信部分。功能層的主要目的是獲取傳感器數(shù)據(jù)和通過繼電器控制小車前端的負(fù)離子發(fā)生器和兩個(gè)加快負(fù)離子擴(kuò)散的風(fēng)扇。這里的stm32相當(dāng)于一個(gè)中轉(zhuǎn),用的是rt—thread實(shí)時(shí)操作系統(tǒng),版本是4.0.2(寫的比較早,當(dāng)時(shí)的rtt還有小bug,現(xiàn)在已經(jīng)很好用了。)

開啟三個(gè)串口:一個(gè)用于讀取傳感器,一個(gè)用于接收和發(fā)送指令給串口屏,一個(gè)用于給esp32傳輸數(shù)據(jù),通過esp32將數(shù)據(jù)發(fā)送到巴法云平臺,做接入小程序中轉(zhuǎn)。

篇幅有限,代碼放在了網(wǎng)盤上。

鏈接:https://pan.baidu.com/s/1ltgypPMq9heezk412r4IKw?pwd=jhzs

提取碼:jhzs

因?yàn)橛玫氖莚tt,移植性很高,故只寫了應(yīng)用層的main.c函數(shù)。如下:

氣體傳感器如下(所用的是串口協(xié)議)

#include <rtthread.h>

/ *串口1用來調(diào)試* /

#define DBG_TAG \"main\"

#define DBG_LVL DBG_LOG

#include <rtdbg.h>

#include <string.h>

#include <serial.h>//此處有坑,要改頭文件路徑為rt-thread/components/drivers/include/drivers

#include <stdio.h>

#include \"stdlib.h\"

#defineleft_motor_run{rt_pin_write(6,PIN_LOW );rt_pin_write(7,PIN_HIGH);}

#defineleft_motor_back{rt_pin_write(6,PIN_HIGH );rt_pin_write(7,PIN_LOW);}

#definestoping{rt_pin_write(6,PIN_HIGH );rt_pin_write(7,PIN_HIGH);rt_pin_write(16,PIN_HIGH );rt_pin_write(17,PIN_HIGH);}

#defineright_motor_run{rt_pin_write(16,PIN_LOW );rt_pin_write(17,PIN_HIGH);}

#defineright_motor_back{rt_pin_write(16,PIN_HIGH );rt_pin_write(17,PIN_LOW);}

#define key1_openrt_pin_write(51,PIN_LOW );//d3

#define key1_closert_pin_write(51,PIN_HIGH );

#define key2_openrt_pin_write(52,PIN_LOW );//d4

#define key2_closert_pin_write(52,PIN_HIGH );

#define key3_openrt_pin_write(53,PIN_LOW );//d5

#define key3_closert_pin_write(53,PIN_HIGH );

/*micropython esp32與rtt串口DMA傳輸數(shù)據(jù)時(shí)有坑,

* 需在drv_usart.c找到HAL_UART_RxCpltCallback和HAL_UART_RxHalfCpltCallback將dma_isr(&uart->serial)注釋掉,

* 能降低數(shù)據(jù)錯(cuò)誤率*/

/ *串口2的變量 115200* /

struct serial_configureuar2_configs = RT_SERIAL_CONFIG_DEFAULT;

rt_sem_t sem2;

rt_device_t uar2_dev;

rt_thread_t uar_2_th;

rt_thread_t uar_2_deal;

char buffer[128] = {0};

rt_size_t rxlen2 = 0;

/ *串口3的變量 9600* /

struct serial_configureuar3_configs = MY_SERIAL_CONFIG_DEFAULT;

rt_sem_t sem3;

rt_device_t uar3_dev;

rt_thread_t uar_3_th;

uint8_t buffer3[17] = {0};

rt_size_t rxlen3 = 0;

/ *串口4的變量 115200* /

struct serial_configureuar4_configs = RT_SERIAL_CONFIG_DEFAULT;

rt_sem_t sem4;

rt_device_t uar4_dev;

rt_thread_t uar_4_th;

rt_uint8_t buffer4[256] = {0xff};

rt_size_t rxlen4 = 0;

//char deal;

rt_uint8_t deal ;

char wheater[8];

char humidity[4];

char temperature[4];

char wind_speed[4];

char shi[3];

char miao[3];

char fen[3];

char wheater_deal[23]=\"main2.g3.txt=\"\";

char humidity_deal[18]=\"main2.g1.txt=\"\";

char temperature_deal[17]=\"main2.g0.txt=\"\";

char wind_speed_deal[19]=\"main2.g2.txt=\"\";

char shi_deal[15] = \"main.z1.val=\";

char miao_deal[15] = \"main.z0.val=\";

char fen_deal[15] = \"main.z2.val=\";

char end[2]=\"\"\";

char xf_end[3];//串口屏控制幀尾

void uar2_thread_entry(void *parameter)//串口2DMA線程入口

{

rt_size_t len = 0;

/*發(fā)送ch2o數(shù)據(jù)*/

rt_device_write(uar4_dev,0,ch2o_date,sizeof(ch2o_date));

rt_device_write(uar4_dev,0 ,xf_end,sizeof(xf_end));

/*end*/

/*發(fā)送tvoc數(shù)據(jù)*/

rt_device_write(uar4_dev,0 ,tvoc_date,sizeof(tvoc_date));

rt_device_write(uar4_dev,0 ,xf_end,sizeof(xf_end));

/*end*/

/*發(fā)送pm2.5數(shù)據(jù)*/

rt_device_write(uar4_dev,0 ,pm2_5_date,sizeof(pm2_5_date));

rt_device_write(uar4_dev,0 ,xf_end,sizeof(xf_end));

/*end*/

/*發(fā)送pm10數(shù)據(jù)*/

rt_device_write(uar4_dev,0 ,pm10_date,sizeof(pm10_date));

rt_device_write(uar4_dev,0 ,xf_end,sizeof(xf_end));

/*end*/

/*發(fā)送temp數(shù)據(jù)*/rt_device_write(uar4_dev,0,temp_date,sizeof(temp_date));

rt_device_write(uar4_dev,0 ,xf_end,sizeof(xf_end));

/*end*/

/*發(fā)送humi數(shù)據(jù)*/

rt_device_write(uar4_dev,0,humi_date,sizeof(humi_date));

rt_device_write(uar4_dev,0 ,xf_end,sizeof(xf_end));

/*end*/

memset(buffer4, 0, sizeof buffer4);

// }

}

}

rt_err_t uar3_rxback(rt_device_t dev, rt_size_t size)//串口3接收//回調(diào)函數(shù)9600

{

rxlen3 = size;

rt_sem_release(sem3);

return RT_EOK;

}

void uar4_thread_entry(void *parameter)//串口4DMA線程入口

{

rt_size_t len = 0;

while(1)

{

rt_sem_take(sem4, RT_WAITING_FOREVER);

len = rt_device_read(uar4_dev, 0, buffer4, rxlen4);

buffer4[len] = \'\\\\0\';

rt_kprintf(\"%c\\\\n\",len);

if (buffer4[0] == 0x02) {

deal=0x02;

rt_device_write(uar2_dev,0 ,&deal,16);

rt_kprintf(\"uart4\");

deal=0;

}

if (buffer4[0] == 0x01) {

deal=0x01;

rt_device_write(uar2_dev,0 ,&deal,16);

rt_kprintf(\"uart4\");

deal=0;

}

if (buffer4[0] == 0x03) {

deal=0x03;

//memset(buffer,0,sizeof(buffer));

rt_device_write(uar2_dev,0 ,&deal,16);

rt_kprintf(\"uart4\");

deal=0;

}

switch(buffer4[0])

{

case 0x44: key1_open;break;

case 0x55: key1_close;break;

case 0x66: key2_open;break;

case 0x77: key2_close;break;

case 0x88: key3_open;break;

case 0x99: key3_close;break;

}

}

}

rt_err_t uar4_rxback(rt_device_t dev, rt_size_t size)//串口4接收回調(diào)函數(shù)

{

rxlen4 = size;

rt_sem_release(sem4);

return RT_EOK;

}

void clearmachine_and_motor_pin_init()

{

rt_pin_mode(6,PIN_MODE_OUTPUT );//a6

rt_pin_mode(7,PIN_MODE_OUTPUT );//a7

rt_pin_mode(16,PIN_MODE_OUTPUT );//b0

rt_pin_mode(17,PIN_MODE_OUTPUT );//b1

rt_pin_mode(28, PIN_MODE_INPUT);//b12 left

rt_pin_mode(29,PIN_MODE_INPUT);//b13right

rt_pin_mode(51,PIN_MODE_OUTPUT );//d3

rt_pin_mode(52,PIN_MODE_OUTPUT );//d4

rt_pin_mode(53,PIN_MODE_OUTPUT );//d5

key1_close;

key2_close;

key3_close;

}

int main(void)

{

clearmachine_and_motor_pin_init();//引腳初始化

/*串口2 DMA初始化*/

uar2_dev = rt_device_find(\"uart2\");

if (uar2_dev == NULL) {

LOG_E(\"rt_device_find[uart2] FAILED...\\\\\\\\\\\\\\\\n\");

return -EINVAL;

}

rt_device_open(uar2_dev, RT_DEVICE_OFLAG_RDWR | RT_DEVICE_FLAG_DMA_RX);

rt_device_control(uar2_dev, RT_DEVICE_CTRL_CONFIG, (void *)&uar2_configs);

rt_device_set_rx_indicate(uar2_dev, uar2_rxback);

uar_2_th = rt_thread_create(\"uar2_rx_thread\", uar2_thread_entry, NULL, 4096, 10, 5);

rt_thread_startup(uar_2_th);

sem2 = rt_sem_create(\"sem2\", 1, RT_IPC_FLAG_FIFO);

if(sem2 == RT_NULL){

LOG_E(\"sem2 rt_sem_create failed...\\\\\\\\\\\\\\\\n\");

return -ENOMEM;

}

LOG_D(\"sem2 rt_sem_create successed...\\\\\\\\\\\\\\\\n\");

/*串口2 DMA初始化結(jié)束*/

//uar_2_deal = rt_thread_create(\"uar2_deal\", uar2_deal_entry, NULL, 512, 13, 5);

//rt_thread_startup(uar_2_deal);

/*串口3 DMA初始化9600*/

uar3_dev = rt_device_find(\"uart3\");

if (uar3_dev == NULL) {

LOG_E(\"rt_device_find[uart3] FAILED...\\\\\\\\\\\\\\\\n\");

return -EINVAL;

}

rt_device_open(uar3_dev, RT_DEVICE_OFLAG_RDWR | RT_DEVICE_FLAG_DMA_RX);

rt_device_control(uar3_dev, RT_DEVICE_CTRL_CONFIG, (void *)&uar3_configs);

rt_device_set_rx_indicate(uar3_dev, uar3_rxback);

uar_3_th = rt_thread_create(\"uar3_rx_thread\", uar3_thread_entry, NULL, 4096, 12, 5);

rt_thread_startup(uar_3_th);

sem3 = rt_sem_create(\"sem3\", 1, RT_IPC_FLAG_FIFO);

if(sem3 == RT_NULL){

LOG_E(\"sem3 rt_sem_create failed...\\\\\\\\\\\\\\\\n\");

return -ENOMEM;

}

LOG_D(\"sem3 rt_sem_create successed...\\\\\\\\\\\\\\\\n\");

/*串口3 DMA初始化結(jié)束*/

/*串口4 DMA初始化*/

uar4_dev = rt_device_find(\"uart4\");

if (uar4_dev == NULL) {

LOG_E(\"rt_device_find[uart4] FAILED...\\\\\\\\\\\\\\\\n\");

return -EINVAL;

}

rt_device_open(uar4_dev, RT_DEVICE_OFLAG_RDWR | RT_DEVICE_FLAG_DMA_RX);

rt_device_control(uar4_dev, RT_DEVICE_CTRL_CONFIG, (void *)&uar4_configs);

rt_device_set_rx_indicate(uar4_dev, uar4_rxback);

uar_4_th = rt_thread_create(\"uar4_rx_thread\", uar4_thread_entry, NULL, 4096, 11, 5);

rt_thread_startup(uar_4_th);

sem4 = rt_sem_create(\"sem4\", 4, RT_IPC_FLAG_FIFO);

if(sem4 == RT_NULL){

LOG_E(\"sem4 rt_sem_create failed...\\\\\\\\\\\\\\\\n\");

return -ENOMEM;

}

LOG_D(\"sem4 rt_sem_create successed...\\\\\\\\\\\\\\\\n\");

/*串口4 DMA初始化結(jié)束*/

return RT_EOK;

}

最后是串口屏顯示,同時(shí)數(shù)據(jù)也能在微信小程序上查看

接下來是魯班貓1s做ros主控的部分。

1、移植輪趣大佬的ros源碼:

根據(jù)我現(xiàn)有的硬件:思嵐a1雷達(dá)、一個(gè)usb rgb攝像頭選擇合適的功能包,然后開始移植。

中途會出現(xiàn)很多錯(cuò)誤。例如缺少部分功能包,sudo apt install ros-noetic-(包名)【我的ros版本是noetic】。

2、移植完畢后發(fā)現(xiàn)大佬們并沒有使用魯班貓上的npu。所以我嘗試了用npu跑yolov5在debain10的環(huán)境下用python接口效果如下:

Python與c++接口將圖片監(jiān)測改成實(shí)時(shí)攝像頭的代碼:

只需更改cv.Capture()函數(shù)的攝像頭設(shè)備號即可。

鏈接:https://pan.baidu.com/s/1gauOezF-X8ZuvU4b0I4v4A?pwd=jhzs

提取碼:jhzs

Python接口的yolov7只需更改yolov5代碼的錨點(diǎn)即可

以下只列出主函數(shù)部分,完整的在鏈接里。

import urllib

import time

import sys

import numpy as np

import cv2

from rknnlite.api import RKNNLite

#from PIL import Image

RKNN_MODEL = \'mask.rknn\'

IMG_PATH = \'./test.jpg\'

OBJ_THRESH = 0.25

NMS_THRESH = 0.45

IMG_SIZE = 640

......(省略中間部分)

if __name__ == \'__main__\':

# Create RKNN object

rknn = RKNNLite()

# init runtime environment

print(\'--> Load RKNN model\')

ret = rknn.load_rknn(RKNN_MODEL)

#ret = rknn.init_runtime(target=\'rv1126\', device_id=\'256fca8144d3b5af\')

if ret != 0:

print(\'Load RKNN model failed\')

exit(ret)

print(\'done\')

ret = rknn.init_runtime()

if ret != 0:

print(\'Init runtime environment failed!\')

exit(ret)

print(\'done\')

capture = cv2.VideoCapture(9)

ref, frame = capture.read()

if not ref:

raise ValueError(\"error reading\")

fps = 0.0

while(True):

t1 = time.time()

#

ref, frame = capture.read()

if not ref:

break

# BGRtoRGB

frame = cv2.cvtColor(frame,cv2.COLOR_BGR2RGB)

#############

img = frame

img, ratio, (dw, dh) = letterbox(img, new_shape=(IMG_SIZE, IMG_SIZE))

img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

# Inference

print(\'--> Running model\')

outputs = rknn.inference(inputs=[img])

input0_data = outputs[0]

input1_data = outputs[1]

input2_data = outputs[2]

input0_data = input0_data.reshape([3, -1]+list(input0_data.shape[-2:]))

input1_data = input1_data.reshape([3, -1]+list(input1_data.shape[-2:]))

input2_data = input2_data.reshape([3, -1]+list(input2_data.shape[-2:]))

input_data = list()

input_data.append(np.transpose(input0_data, (2, 3, 0, 1)))

input_data.append(np.transpose(input1_data, (2, 3, 0, 1)))

input_data.append(np.transpose(input2_data, (2, 3, 0, 1)))

boxes, classes, scores = yolov5_post_process(input_data)

img_1 = cv2.cvtColor(img, cv2.COLOR_RGB2BGR)

#img_1 = img_1[:,:,::-1]

if boxes is not None:

draw(img_1, boxes, scores, classes)

fps= ( fps + (1./(time.time()-t1)) ) / 2

print(\"fps= %.2f\"%(fps))

#img_1 = cv2.putText(frame, \"fps= %.2f\"%(fps), (0, 40), cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 255, 0), 2)

cv2.imshow(\"video\",img_1[:,:,::-1])

c= cv2.waitKey(1) & 0xff

if c==27:

capture.release()

break

print(\"Video Detection Done!\")

capture.release()

cv2.destroyAllWindows()

但這還沒有接到ros中,為此我去翻rknn的github找到了接入ros的方法。

Ros功能包如下:

Launch文件:

Yolov5.launch

<param name=\"model_file\" value=\"yolov5s-640-640.rknn\"/>

<param name=\"display_output\" value=\"$(arg display_output)\"/>

<param name=\"prob_threshold\" value=\"0.35\"/>

<param name=\"chip_type\" value=\"$(arg chip_type)\"/>

<remap from=\"/camera/image_raw\" to=\"$(arg camera_topic)\"/>

Camrea.Launch

<param name=\"video_device\" value=\"/dev/$(arg device)\" />

<param name=\"image_width\" value=\"640\" />

<param name=\"image_height\" value=\"480\" />

<param name=\"framerate\" value=\"30\" />

<param name=\"pixel_format\" value=\"yuyv\" />

<param name=\"camera_frame_id\" value=\"usn_cam\" />

<param name=\"io_method\" value=\"mmap\"/>

<param name=\"camera_name\" value=\"usn_cam\"/>

啟動攝像頭

默認(rèn)的攝像頭設(shè)備號為video0 魯班貓為video9

1、roslaunch rknn_ros camera.launch

2、roslaunch rknn_ros camera.launch device:=video9(可傳參或者改launch)

3、roslaunch rknn_ros yolov5.launch chip_type:=RK3566

鏈接:

鏈接:https://pan.baidu.com/s/1QhfRjDs1sftAB0Q-TS5dBA?pwd=jhzs

提取碼:jhzs

不出意外改好板子型號和對應(yīng)的video就能用了。

可打開rviz或者rqt_image_view查看。

模型是我自己訓(xùn)練的,鏈接如下:

鏈接:https://pan.baidu.com/s/1FSJyW6kp4cy3-yakTq_Q4g?pwd=jhzs

提取碼:jhzs

YOLOV5配置和使用:

官方的源碼是不建議的:

用這個(gè):

https://gitcode.net/mirrors/airockchip/yolov5?utm_source=csdn_github_accelerator

這是瑞芯微官方推薦的源碼,但是也需要更改。

yolov5-master\\\\models下的yolo.py

找到

def forward(self, x):

函數(shù),更改為:

def forward(self, x):

z = []# inference output

for i in range(self.nl):

if os.getenv(\'RKNN_model_hack\', \'0\') != \'0\':

z.append(torch.sigmoid(self.m[i](x[i])))

continue

x[i] = self.m[i](x[i])# conv

\'\'\'

bs, _, ny, nx = x[i].shape# x(bs,255,20,20) to x(bs,3,20,20,85)

x[i] = x[i].view(bs, self.na, self.no, ny, nx).permute(0, 1, 3, 4, 2).contiguous()

if not self.training:# inference

if self.onnx_dynamic or self.grid[i].shape[2:4] != x[i].shape[2:4]:

self.grid[i], self.anchor_grid[i] = self._make_grid(nx, ny, i)

y = x[i].sigmoid()

if self.inplace:

y[..., 0:2] = (y[..., 0:2] * 2 + self.grid[i]) * self.stride[i]# xy

y[..., 2:4] = (y[..., 2:4] * 2) ** 2 * self.anchor_grid[i]# wh

else:# for YOLOv5 on AWS Inferentia https://github.com/ultralytics/yolov5/pull/2953

xy, wh, conf = y.split((2, 2, self.nc + 1), 4)# y.tensor_split((2, 4, 5), 4)# torch 1.8.0

xy = (xy * 2 + self.grid[i]) * self.stride[i]# xy

wh = (wh * 2) ** 2 * self.anchor_grid[i]# wh

y = torch.cat((xy, wh, conf), 4)

z.append(y.view(bs, -1, self.no))

if os.getenv(\'RKNN_model_hack\', \'0\') != \'0\':

return z

return x if self.training else (torch.cat(z, 1),) if self.export else (torch.cat(z, 1), x)

\'\'\'

return x[0],x[1],x[2]

這樣就可以在pt權(quán)重轉(zhuǎn)onnx時(shí)去掉最后一個(gè)Detect層。

pt轉(zhuǎn)onnx指令

python export.py --weights yolov5s.pt --img 640 --batch 1 --opset 11 --include onnx

紅色字體部分換成要轉(zhuǎn)換的權(quán)重文件例如我的就是:

**python export.py --weights ** **weights/best.pt ** --img 640 --batch 1 --opset 11 --include onnx

opset選擇11。

這樣出來的模型是有三個(gè)節(jié)點(diǎn)的模型,才是可用的。

可用netron查看:

netron:https://netron.app/(瀏覽器網(wǎng)址)

將模型拖到頁面可查看。

有三個(gè)輸出節(jié)點(diǎn)。

且要記好三個(gè)節(jié)點(diǎn)的名字。

在官方要求的ubuntu pc端上進(jìn)行模型轉(zhuǎn)換。

我這里有個(gè)改好的yolov5源碼(里面是我訓(xùn)練的抽煙監(jiān)測模型)

網(wǎng)盤鏈接如下:

鏈接:https://pan.baidu.com/s/1fXKNoXhu4m1SmTr4fc-afg?pwd=jhzs

提取碼:jhzs

Chatgpt部分是b站機(jī)器人阿杰github開源項(xiàng)目。

https://www.bilibili.com/video/BV12M4y1R76M/?spm_id_from=333.788

效果如圖:

嗚。。。不要看問得什么

整車的sw模型鏈接:soildwork2020及以上版本可直接打開

鏈接:https://pan.baidu.com/s/1KqB1SOD418dCvyDaZFMgpg?pwd=jhzs

提取碼:jhzs當(dāng)時(shí)還理想化的擼了個(gè)履帶,可后來發(fā)現(xiàn)打印出來根本用不了,故放棄,換成了輪子。

放鏈接是希望能夠幫到像我一樣步步踩坑的菜鳥級選手。我是老踩坑怪了。

有不當(dāng)?shù)牡胤剑€望大佬們海涵。

2023-03-24 20:05:14

電子發(fā)燒友App

電子發(fā)燒友App

評論